Introduction & Overview

In the fast-evolving world of software engineering, ensuring reliable, scalable, and rapid delivery of software is critical. Site Reliability Engineering (SRE) bridges the gap between development and operations, emphasizing automation, reliability, and scalability. A Deployment Pipeline is a cornerstone of this practice, enabling teams to automate the process of building, testing, and deploying code to production with confidence. This tutorial provides a detailed guide to understanding and implementing deployment pipelines within the SRE framework, complete with practical examples, best practices, and comparisons.

What is a Deployment Pipeline?

A deployment pipeline is an automated, repeatable process that takes code from version control to production, incorporating stages like building, testing, and deployment. It ensures that code changes are validated, secure, and reliably delivered to end-users, minimizing manual intervention and errors.

History or Background

The concept of deployment pipelines emerged with the rise of Continuous Integration (CI) and Continuous Deployment (CD) practices in the early 2000s, popularized by tools like Jenkins and the DevOps movement. Jez Humble and David Farley’s book, Continuous Delivery (2010), formalized the deployment pipeline as a structured approach to software delivery. In SRE, deployment pipelines align with the principle of automating toil, ensuring systems are reliable and scalable while supporting rapid iteration.

- Pre-DevOps Era: Manual releases caused downtime, rollback issues, and poor reliability.

- 2010: Jez Humble and Dave Farley formalized Continuous Delivery and the deployment pipeline in their book Continuous Delivery.

- DevOps & SRE Era: Deployment pipelines became the backbone of CI/CD, ensuring frequent, automated, and safe deployments.

- Today: Cloud-native, GitOps, and Kubernetes-based pipelines dominate modern infrastructures.

Why is it Relevant in Site Reliability Engineering?

In SRE, deployment pipelines are vital for:

- Reliability: Automated testing and validation reduce the risk of production failures.

- Scalability: Pipelines handle increasing code complexity and deployment frequency.

- Toil Reduction: Automation eliminates repetitive manual tasks, aligning with SRE’s focus on reducing operational overhead.

- Incident Prevention: Early detection of issues through testing improves system stability.

Core Concepts & Terminology

Key Terms and Definitions

- Continuous Integration (CI): Developers frequently merge code changes into a shared repository, triggering automated builds and tests.

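- Continuous Deployment (CD): Automated deployment of every validated code change to production. The closely related Continuous Delivery keeps every change releasable but leaves the final production release as a manual decision.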

- Pipeline Stages: Sequential steps in the pipeline, such as source, build, test, and deploy.

- Artifacts: Compiled outputs (e.g., binaries, containers) stored for deployment.

- Canary Release: Gradual rollout of changes to a subset of users to minimize risk.

- Blue-Green Deployment: Running two identical environments (blue and green) to switch traffic seamlessly during deployment.
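A canary release needs a rule for deciding which users see the new version. Below is a minimal Python sketch of one common approach, assuming each request carries a stable user ID: hash the ID into one of 100 buckets and route the user to the canary when the bucket is below the rollout percentage. The function names are hypothetical and not taken from any particular load balancer or service mesh.

```python
import hashlib


def bucket_for(user_id: str, buckets: int = 100) -> int:
    """Map a user ID to a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer, then reduce modulo the bucket count.
    return int.from_bytes(digest[:8], "big") % buckets


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Return True if this user should be served by the canary release.

    The same user always lands in the same bucket, so raising canary_percent
    only adds users to the canary; nobody flips back and forth between versions.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return bucket_for(user_id) < canary_percent


users = [f"user-{i}" for i in range(10_000)]
share = sum(routes_to_canary(u, 5) for u in users) / len(users)
print(f"canary share at 5%: {share:.3f}")  # should be roughly 0.050 for a uniform hash
```

Because the assignment depends only on the user ID, raising the rollout from 5% to 25% keeps the original canary users on the new version and adds more, so each user sees a consistent version throughout the rollout.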

| Term | Definition |
|---|---|
| CI/CD | Continuous Integration and Continuous Deployment, the foundation of pipelines. |
| Stages | Sequential steps (e.g., Build → Test → Deploy → Monitor). |
| Artifacts | Packaged output (e.g., Docker image, JAR file). |
| Environment | Deployment target (Dev, Staging, Production). |
| Gatekeeping | Automated checks/tests before moving to the next stage. |
| Rollback | Reverting to a previous stable version after failure. |
| Blue/Green Deployment | Running two environments, switching traffic between them. |
| Canary Release | Deploying changes to a small subset of users before full rollout. |
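The Blue/Green Deployment and Rollback rows describe one mechanism: the previous release stays deployed in the idle environment, so rolling back is just switching traffic again. Here is a toy Python model of that idea; `BlueGreenRouter` and the `health_check` callback are hypothetical, and in practice the switch would be a load-balancer or Kubernetes Service change rather than a field assignment.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class BlueGreenRouter:
    """Toy model of a blue-green setup: two environments, one serving live traffic.

    `versions` records which release each environment runs; `live` names the
    environment the (hypothetical) load balancer currently points at.
    """
    versions: Dict[str, str] = field(default_factory=lambda: {"blue": "v1", "green": "v1"})
    live: str = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    @property
    def live_version(self) -> str:
        return self.versions[self.live]

    def deploy(self, version: str, health_check: Callable[[str, str], bool]) -> bool:
        """Deploy to the idle environment and switch traffic only if it is healthy."""
        target = self.idle
        self.versions[target] = version
        if not health_check(target, version):
            # Gate failed: live traffic never left the current environment.
            return False
        self.live = target
        return True

    def rollback(self) -> None:
        """Send traffic back to the other environment, which still runs the
        previous release until the next deploy overwrites it."""
        self.live = self.idle


router = BlueGreenRouter()
router.deploy("v2", lambda env, ver: True)
print(router.live, router.live_version)  # green v2
router.rollback()
print(router.live, router.live_version)  # blue v1
```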

How it Fits into the Site Reliability Engineering Lifecycle

In SRE, the deployment pipeline integrates with the following lifecycle phases:

- Development: Code is written and committed to version control.

- Validation: Automated tests ensure code quality and functionality.

- Deployment: Code is deployed to staging or production environments.

- Monitoring: Post-deployment metrics track system health and performance.

- Incident Response: Pipelines support rollbacks or hotfixes in case of failures.
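The Monitoring phase often comes down to comparing post-deployment metrics with service level objectives (SLOs). The following is a minimal sketch, assuming raw latency samples in milliseconds and illustrative targets (300 ms p99 latency, 1% error rate) that you would replace with your own SLOs. It uses the nearest-rank percentile; Prometheus, by contrast, estimates quantiles from histogram buckets with `histogram_quantile`.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample that is >= p percent of all samples."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def deployment_is_healthy(latencies_ms: Sequence[float], errors: int, requests: int,
                          p99_slo_ms: float = 300.0, max_error_rate: float = 0.01) -> bool:
    """Return True if p99 latency and error rate are both within the (assumed) targets."""
    error_rate = errors / requests if requests else 0.0
    return percentile(latencies_ms, 99) <= p99_slo_ms and error_rate <= max_error_rate
```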

Architecture & How It Works

Components and Internal Workflow

A deployment pipeline typically consists of:

- Version Control System (VCS): Stores code (e.g., Git, SVN).

- Build Server: Compiles code and generates artifacts (e.g., Jenkins, GitLab CI).

- Testing Framework: Runs unit, integration, and end-to-end tests (e.g., JUnit, Selenium).

- Artifact Repository: Stores build outputs (e.g., AWS CodeArtifact, Nexus).

- Deployment Tools: Manage deployment to environments (e.g., Kubernetes, AWS CodeDeploy).

- Monitoring and Logging: Tracks pipeline and application health (e.g., Prometheus, ELK Stack).
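The Artifact Repository matters because the pipeline should deploy exactly the artifact it built and tested. One common way to enforce that is to record a SHA-256 digest at build time and re-check it before deployment. Below is a minimal sketch; the `.manifest.json` sidecar file and its fields are a hypothetical format, and many artifact repositories also record checksums themselves.

```python
import hashlib
import json
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file so large artifacts do not need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def write_manifest(artifact: Path, version: str) -> Path:
    """Build stage: record the artifact's version and digest next to it (hypothetical format)."""
    manifest = artifact.with_name(artifact.name + ".manifest.json")
    manifest.write_text(json.dumps({"file": artifact.name, "version": version,
                                    "sha256": sha256_of(artifact)}, indent=2))
    return manifest


def verify_before_deploy(artifact: Path, manifest: Path) -> bool:
    """Deploy stage: refuse anything that differs from the artifact that was built and tested."""
    recorded = json.loads(manifest.read_text())
    return recorded["file"] == artifact.name and recorded["sha256"] == sha256_of(artifact)
```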

Workflow:

- Source Stage: Code is committed to the VCS, triggering the pipeline.

- Build Stage: Code is compiled, dependencies resolved, and artifacts generated.

- Test Stage: Automated tests (unit, integration, security) validate the build.

- Deploy Stage: Artifacts are deployed to staging or production environments.

- Monitor Stage: Metrics and logs ensure the deployment is stable.
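The stage ordering above can be modeled as a fail-fast loop: each stage runs only if every earlier stage succeeded. Here is a minimal Python sketch in which the stage callables are hypothetical stand-ins for real build, test, and deploy commands.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns overall success and a log of what ran, so a failed Test stage
    means Deploy and Monitor never execute.
    """
    log: List[str] = []
    for name, action in stages:
        try:
            ok = action()
        except Exception as exc:  # a crashing stage counts as a failure
            log.append(f"{name}: error ({exc})")
            return False, log
        log.append(f"{name}: {'passed' if ok else 'failed'}")
        if not ok:
            return False, log
    return True, log


stages = [
    ("Source", lambda: True),
    ("Build", lambda: True),
    ("Test", lambda: False),  # simulate a failing test suite
    ("Deploy", lambda: True),
    ("Monitor", lambda: True),
]
ok, log = run_pipeline(stages)
print(ok)   # False
print(log)  # ['Source: passed', 'Build: passed', 'Test: failed']
```

Failing fast is what keeps a broken build from ever reaching the Deploy stage; it is the Gatekeeping behavior from the terminology table expressed as a loop.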

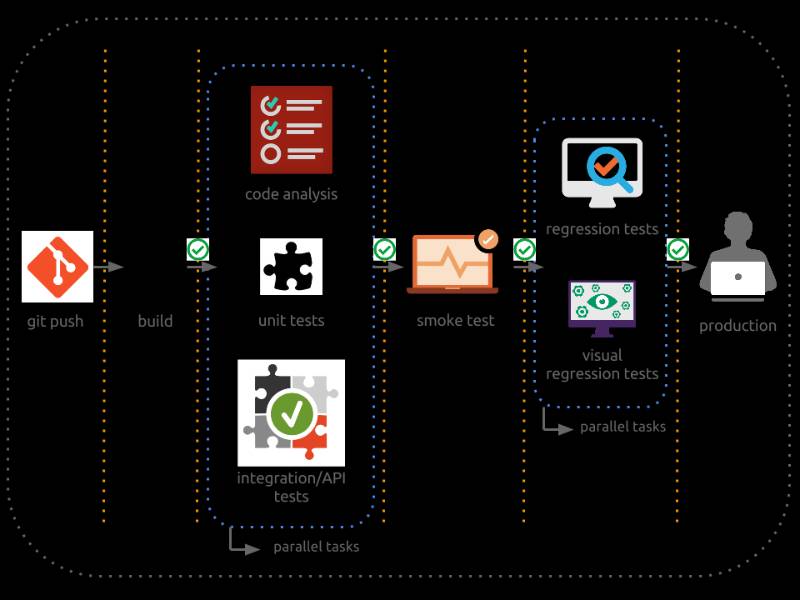

Architecture Diagram Description

The architecture diagram for a deployment pipeline in SRE can be visualized as a linear flow with interconnected components:

- VCS (Git) → Triggers the pipeline on code commit.

- Build Server (Jenkins/GitLab CI) → Compiles code and generates artifacts.

- Artifact Repository (Nexus/Artifactory) → Stores artifacts securely.

- Testing Environment → Runs automated tests in parallel (unit, integration, security).

- Staging Environment → Deploys artifacts for final validation.

- Production Environment → Uses deployment strategies (e.g., canary, blue-green) for rollout.

- Monitoring Tools (Prometheus/Grafana) → Tracks pipeline and application metrics.
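The Testing Environment entry runs unit, integration, and security suites in parallel and gates on all of them. Below is a minimal sketch using Python's standard `concurrent.futures`, assuming each suite is a callable that returns True on success (for example, a hypothetical wrapper that runs `pytest` in a subprocess and checks its exit code). Threads fit here because such suites mostly wait on subprocesses or I/O; CPU-heavy in-process work would call for `ProcessPoolExecutor` instead.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_suites_in_parallel(suites: Dict[str, Callable[[], bool]],
                           max_workers: int = 4) -> Dict[str, bool]:
    """Run independent test suites concurrently and collect pass/fail per suite."""
    results: Dict[str, bool] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {name: pool.submit(suite) for name, suite in suites.items()}
        for name, fut in futures.items():
            try:
                results[name] = bool(fut.result())
            except Exception:
                # .result() re-raises the suite's exception; treat it as a failure.
                results[name] = False
    return results


def gate(results: Dict[str, bool]) -> bool:
    """The Test stage passes only if every suite passed."""
    return all(results.values())
```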

```text
[ Source Control ]
        |
        v
[ Build Stage ]
        |
        v
[ Test Stage ]
        |
        v
[ Artifact Repository ]
        |
        v
[ Staging Deployment ] ---> [ Monitoring & Logs ]
        |
        v
[ Approval / Canary ]
        |
        v
[ Production Deployment ]
```

Diagram Layout:

- A horizontal flowchart with arrows connecting each component.

- VCS on the left, followed by Build Server, Artifact Repository, Testing Environment, Staging, Production, and Monitoring on the right.

- Feedback loops from Testing and Monitoring back to VCS for error handling or rollbacks.
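The feedback loop from Monitoring can be automated as a canary analysis step that decides whether to promote or roll back. The following is a minimal sketch, assuming you can count requests and errors for the baseline and the canary over the same time window. The thresholds (at least 500 canary requests, at most 1.5 times the baseline error rate plus 0.1 percentage points) are illustrative and not recommendations from any tool.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PROMOTE = "promote"
    ROLLBACK = "rollback"
    WAIT = "wait"  # not enough canary traffic to judge yet


@dataclass
class Window:
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def judge_canary(baseline: Window, canary: Window, min_requests: int = 500,
                 max_ratio: float = 1.5, abs_slack: float = 0.001) -> Decision:
    """Compare canary and baseline error rates over the same time window.

    The canary fails if its error rate exceeds the baseline's by more than
    `max_ratio` times plus a small absolute slack, so that a zero-error
    baseline still tolerates a very small canary error rate.
    """
    if canary.requests < min_requests:
        return Decision.WAIT
    limit = baseline.error_rate * max_ratio + abs_slack
    return Decision.ROLLBACK if canary.error_rate > limit else Decision.PROMOTE
```

Returning `WAIT` rather than `PROMOTE` on low traffic avoids promoting a canary that simply has not been exercised yet.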

Integration Points with CI/CD or Cloud Tools

- CI/CD Tools: Jenkins, GitLab CI, CircleCI, or AWS CodePipeline orchestrate the pipeline.

- Cloud Platforms: AWS (CodeBuild, CodeDeploy), Google Cloud (Cloud Build, Cloud Deploy), Azure DevOps.

- Containerization: Docker and Kubernetes for packaging and deploying applications.

- Observability: Prometheus, Grafana, or Datadog for real-time monitoring.

Installation & Getting Started

Basic Setup or Prerequisites

To set up a deployment pipeline for SRE:

- Version Control: Install Git and configure a repository (e.g., GitHub, GitLab).

- CI/CD Tool: Install Jenkins or use a cloud-based service like GitLab CI or AWS CodePipeline.

- Testing Frameworks: Install JUnit (Java), pytest (Python), or similar.

- Artifact Repository: Set up Nexus, Artifactory, or AWS CodeArtifact.

- Cloud/Deployment Platform: Access to AWS, GCP, Azure, or Kubernetes.

- Monitoring Tools: Install Prometheus and Grafana for observability.

Hands-on: Step-by-Step Beginner-Friendly Setup Guide

Let’s set up a basic deployment pipeline using Jenkins on a local machine or cloud instance.

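1. Install Jenkins (current Jenkins releases require Java 17 or newer):

```bash
# On Ubuntu
sudo apt update
sudo apt install fontconfig openjdk-17-jre

# Add the Jenkins signing key and the stable package repository (apt-key is deprecated)
sudo wget -O /usr/share/keyrings/jenkins-keyring.asc https://pkg.jenkins.io/debian-stable/jenkins.io-2023.key
echo "deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] https://pkg.jenkins.io/debian-stable binary/" | sudo tee /etc/apt/sources.list.d/jenkins.list > /dev/null

sudo apt update
sudo apt install jenkins
```

Access Jenkins at http://localhost:8080 and complete the initial setup; the initial admin password is stored in /var/lib/jenkins/secrets/initialAdminPassword.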

2. Configure Git Repository:

- Create a GitHub repository with a sample project (e.g., a Python Flask app).

- Add a `Jenkinsfile` to define the pipeline:

```groovy
pipeline {
    agent any
    stages {
        stage('Build') {
            steps {
                // `sh` steps run under /bin/sh, which may lack `source`; use `.` instead
                sh 'python3 -m venv venv && . venv/bin/activate && pip install -r requirements.txt'
            }
        }
        stage('Test') {
            steps {
                // Assumes pytest is listed in requirements.txt
                sh '. venv/bin/activate && pytest tests/'
            }
        }
        stage('Deploy') {
            steps {
                sh 'echo Deploying to staging'
                // Add deployment commands (e.g., AWS CLI, kubectl)
            }
        }
    }
}
```

3. Set Up Jenkins Pipeline:

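- In Jenkins, install the necessary plugins (e.g., Git, Pipeline) if they were not installed during the initial setup.

- Create a new Pipeline job.

- In the job's Pipeline section, choose "Pipeline script from SCM", point it to your GitHub repository, and set the script path to `Jenkinsfile`.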

4. Add Testing:

- Ensure your project includes tests (e.g., pytest for Python).

- Example test file (`tests/test_app.py`):

```python
def test_app():
    assert True  # Replace with actual tests
```

5. Deploy to a Staging Environment:

- Use a cloud service like AWS Elastic Beanstalk or a local Docker container.

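- Example deployment commands: the first registers a source bundle already uploaded to S3 as a new application version, and the second deploys that version to a staging environment (the bucket, key, and environment names are placeholders):

```bash
aws elasticbeanstalk create-application-version --application-name my-app --version-label v1 --source-bundle S3Bucket=my-bucket,S3Key=my-app-v1.zip
aws elasticbeanstalk update-environment --environment-name my-app-staging --version-label v1
```

6. Monitor the Pipeline: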

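- Install the Jenkins Prometheus metrics plugin, which exposes Jenkins and job metrics at the `/prometheus/` endpoint by default, add that endpoint as a Prometheus scrape target, and configure Grafana to visualize the metrics.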
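Before monitoring takes over, a pipeline commonly runs a smoke test against the freshly deployed staging environment. Below is a minimal standard-library sketch, assuming the application exposes a health endpoint that returns HTTP 200 when ready; the `/health` path is a hypothetical convention (for example, a Flask route you add yourself).

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request, or 0 if the service is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a status code
        return err.code
    except (urllib.error.URLError, OSError):  # connection refused, DNS failure, timeout
        return 0


def wait_until_healthy(url: str, attempts: int = 10, delay_s: float = 3.0,
                       probe: Callable[[str], int] = http_status) -> bool:
    """Poll a health endpoint until it answers 200, or give up after `attempts` tries."""
    for attempt in range(1, attempts + 1):
        if probe(url) == 200:
            return True
        if attempt < attempts:
            time.sleep(delay_s)
    return False
```

A Deploy stage could run this as a script and exit non-zero when `wait_until_healthy` returns False, which fails the Jenkins build. The `probe` parameter exists so the polling logic can be tested without a live server.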

Real-World Use Cases

- E-commerce Platform (High-Availability Retail):

  - Scenario: An e-commerce company uses a deployment pipeline to roll out new features (e.g., a recommendation engine) during peak shopping seasons.

  - Implementation: Jenkins pipeline with unit tests, integration tests with mock APIs, and canary deployments to Kubernetes. Prometheus monitoring tracks latency throughout the rollout.

  - Outcome: Reduced deployment failures by 30% and improved customer experience during Black Friday sales.

- Financial Services (Regulatory Compliance):

  - Scenario: A bank deploys a fraud detection system requiring PCI DSS compliance.

  - Implementation: GitLab CI pipeline with security scans (e.g., SonarQube), blue-green deployments, and audit logging. Compliance checks are automated in the pipeline.

  - Outcome: Ensured regulatory compliance while deploying updates weekly.

- Streaming Service (Real-Time Processing):

- Healthcare (Data Sensitivity):

  - Scenario: A healthcare provider updates a patient management system with strict data privacy requirements.

  - Implementation: Azure DevOps pipeline with end-to-end encryption, role-based access controls, and automated compliance checks.

  - Outcome: Reduced data breach risks and ensured HIPAA compliance.

Benefits & Limitations

Key Advantages

- Automation: Reduces manual errors and speeds up delivery.

- Reliability: Automated testing catches issues early, ensuring stable releases.

- Scalability: Pipelines handle increased deployment frequency and complexity.

- Traceability: Version control and logging provide audit trails for compliance.

Common Challenges or Limitations

- Complexity: Managing multiple pipelines in large organizations can be challenging.

- Initial Setup Cost: Requires significant time and expertise to configure.

- Tool Integration: Compatibility issues between tools (e.g., Jenkins with Kubernetes) can arise.

- Security Risks: Misconfigured pipelines may expose sensitive data.
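One inexpensive guard against that last risk is a pipeline step that fails the build when source files contain something that looks like a credential. The following is a minimal sketch; the two patterns (an AWS access key ID, which is `AKIA` followed by 16 uppercase letters or digits, and PEM private-key headers) are examples only, and dedicated scanners such as gitleaks or truffleHog ship far larger rule sets.

```python
import re
from pathlib import Path
from typing import Iterable, List, Tuple

# Example patterns only; real secret scanners use many more rules.
PATTERNS = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private-key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line number, rule name) for every suspicious line."""
    hits: List[Tuple[int, str]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, rule))
    return hits


def scan_files(paths: Iterable[Path]) -> int:
    """Print findings and return how many were found; a non-zero count should fail the stage."""
    total = 0
    for path in paths:
        for lineno, rule in scan_text(path.read_text(errors="ignore")):
            print(f"{path}:{lineno}: possible secret ({rule})")
            total += 1
    return total
```

A build step might call `scan_files(Path(".").rglob("*.py"))` and exit with status 1 whenever the result is non-zero.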

Best Practices & Recommendations

- Security Tips:

- Performance:

  - Parallelize test execution to reduce pipeline runtime.

  - Use caching for dependencies to speed up builds.

- Maintenance:

- Compliance Alignment:

  - Automate compliance checks (e.g., GDPR, HIPAA) within the pipeline.

  - Use tools like HashiCorp Vault for secrets management.

- Automation Ideas:
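The dependency-caching tip works because CI systems restore a cache by key. Deriving that key from a hash of the dependency lockfiles means the cache is reused until the dependencies change; GitLab CI's `cache:key:files` and GitHub Actions' `hashFiles()` apply the same idea. Below is a sketch, assuming a Python project whose dependencies are pinned in `requirements.txt`; the `pip-pyXY-<hash>` key format is a hypothetical convention.

```python
import hashlib
import platform
from pathlib import Path
from typing import Iterable


def cache_key(lockfiles: Iterable[Path], prefix: str = "pip") -> str:
    """Build a cache key that changes whenever any lockfile's contents change.

    The Python major.minor version is included because packages installed for
    one interpreter version are generally not reusable on another.
    """
    h = hashlib.sha256()
    for path in sorted(lockfiles):  # sort so the key does not depend on argument order
        h.update(path.name.encode("utf-8"))
        h.update(b"\0")
        h.update(path.read_bytes())
        h.update(b"\0")
    py = "py" + "".join(platform.python_version_tuple()[:2])
    return f"{prefix}-{py}-{h.hexdigest()[:16]}"
```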

Comparison with Alternatives

| Feature | Deployment Pipeline | Manual Deployment | Serverless CI/CD (e.g., AWS CodeBuild) |
|---|---|---|---|
| Automation | High (fully automated) | Low (manual steps) | High (managed service) |
| Scalability | High | Low | Very High |
| Control | High (customizable) | High | Low (vendor-locked) |
| Setup Complexity | Moderate to High | Low | Low to Moderate |
| Cost | Medium (infrastructure) | Low tooling cost, high labor cost | Variable (pay-per-use) |
| Use Case | Complex SRE workflows | Small projects | Rapid prototyping |

When to Choose a Deployment Pipeline

- Choose Deployment Pipeline: For large-scale, complex applications requiring reliability, compliance, and frequent releases (e.g., e-commerce, finance).

- Choose Alternatives: Manual deployment for small projects with infrequent changes; serverless CI/CD for rapid, low-maintenance prototyping.

Conclusion

Deployment pipelines are a critical enabler of Site Reliability Engineering, automating the software delivery process while ensuring reliability and scalability. By integrating with CI/CD tools, cloud platforms, and observability systems, pipelines empower SRE teams to deliver high-quality software with minimal toil. As organizations increasingly adopt cloud-native and AI-driven workloads, deployment pipelines will evolve to support real-time analytics and intelligent automation.

Next Steps:

- Explore tools like Jenkins, GitLab CI, or AWS CodePipeline for hands-on practice.

- Join SRE communities on platforms like Reddit or Slack for collaboration.

- Refer to official documentation:

  - Jenkins

  - AWS CodePipeline

  - GitLab CI/CD