Introduction & Overview

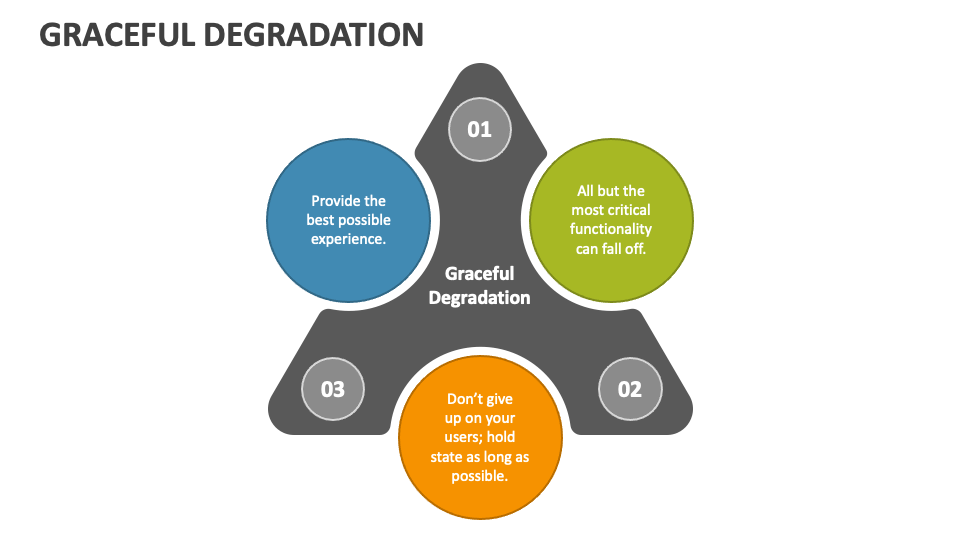

Graceful degradation is a fundamental design philosophy in Site Reliability Engineering (SRE): systems stay operational, albeit with reduced functionality, during failures or high-load scenarios. The approach prioritizes user experience and availability by keeping critical functions running even when components fail or resources are strained, which makes it a cornerstone of resilient, scalable, and user-centric systems.

What is Graceful Degradation?

Graceful degradation refers to a system’s ability to maintain limited functionality when parts of it fail or become overloaded, preventing catastrophic outages. Instead of crashing entirely, the system sacrifices non-critical features or performance to ensure core services remain available. For example, a search engine might return cached results during a database outage, ensuring users still receive a response.

- Definition: A design strategy where systems adapt to failures by reducing functionality or performance gracefully, maintaining core operations.

- Objective: Ensure system availability and user satisfaction under adverse conditions, avoiding complete service disruptions.

- Scope: Applies to distributed systems, web applications, cloud infrastructure, and microservices architectures.
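The search-engine example above can be sketched in a few lines of JavaScript (Node.js). This is a minimal illustration, assuming a synchronous `searchPrimary` function supplied by the caller and an in-memory `Map` standing in for a real cache such as Redis; both are hypothetical.

```javascript
// Hypothetical in-memory cache standing in for Redis or Memcached.
const cache = new Map();

// Try the primary search backend; on failure, serve the last good results.
// `searchPrimary` is a hypothetical synchronous function supplied by the caller.
function searchWithFallback(query, searchPrimary) {
  try {
    const results = searchPrimary(query);
    cache.set(query, results); // refresh the cache on every successful call
    return { results, degraded: false };
  } catch (err) {
    if (cache.has(query)) {
      // Primary failed: stale but useful results beat an error page.
      return { results: cache.get(query), degraded: true };
    }
    // Nothing cached: return an empty, well-formed response instead of crashing.
    return { results: [], degraded: true };
  }
}

// Usage: a healthy call fills the cache, then a simulated outage is served from it.
const healthy = (q) => [`result for ${q}`];
const broken = () => {
  throw new Error('database unavailable');
};
console.log(searchWithFallback('sre', healthy).degraded); // false
console.log(searchWithFallback('sre', broken).degraded); // true (served from cache)
```

Returning an explicit `degraded` flag lets callers and monitoring tell a fallback response apart from a normal one.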

History or Background

The concept of graceful degradation originated in fault-tolerant computing in the 1960s, with early applications in military and aerospace systems, such as ARPA’s efforts to build resilient networks during the Cold War. It gained prominence with the rise of distributed systems and cloud computing, where architectures with many interdependent nodes make partial failures routine rather than exceptional. In SRE, as popularized by Google’s practices, graceful degradation became a key strategy for meeting stringent Service Level Objectives (SLOs) and Service Level Agreements (SLAs) in large-scale systems.

- 1960s–1980s: Emerged in fault-tolerant systems designed to keep mission-critical operations running (e.g., ARPANET).

- 1990s–2000s: Adopted in web design to support older browsers, later extended to distributed systems.

- Modern Era: Integral to SRE, DevSecOps, and cloud-native architectures, supported by tools like Istio, Kubernetes, and chaos engineering frameworks.

Why is it Relevant in Site Reliability Engineering?

In SRE, graceful degradation aligns with the goal of maintaining reliability and uptime while balancing operational complexity and cost. It ensures systems can handle unexpected failures, traffic spikes, or resource constraints without compromising user trust or business continuity. By designing for partial failures, SRE teams can meet SLAs, reduce incident severity, and enhance system resilience.

- Resilience: Mitigates cascading failures in distributed systems.

- User Experience: Preserves core functionality, reducing user frustration.

- Cost Efficiency: Reduces the need to over-provision capacity for rare peaks, because the system can shed or degrade non-critical work instead.

- Compliance: Keeps critical services available during outages, which helps meet regulatory and contractual availability requirements.

Core Concepts & Terminology

Key Terms and Definitions

| Term | Definition |
|---|---|
| Graceful Degradation | A system’s ability to maintain partial functionality during failures or overloads. |
| Fault Tolerance | The ability of a system to continue operating despite hardware or software failures. |
| Load Shedding | Dropping non-critical requests to reduce system load during high traffic. |
| Circuit Breaker | A pattern that stops sending requests to a failing service once errors cross a threshold, preventing cascading failures, and periodically lets a trial request through to detect recovery. |
| Fallback Mechanism | Alternative responses (e.g., cached data) when primary services are unavailable. |
| Service Decomposition | Breaking systems into microservices to isolate failures. |
| Redundancy | Duplicating components to ensure availability if one fails. |
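To make the circuit-breaker definition concrete, here is a minimal sketch of the classic three-state breaker (closed, open, half-open). The class name, thresholds, and the injectable `now` clock are illustrative assumptions rather than the API of any specific library.

```javascript
// Minimal three-state circuit breaker. Thresholds and the injectable clock are
// illustrative assumptions, not the API of any specific library.
class CircuitBreaker {
  constructor({ failureThreshold = 3, resetTimeoutMs = 10000, now = Date.now } = {}) {
    this.failureThreshold = failureThreshold; // consecutive failures before opening
    this.resetTimeoutMs = resetTimeoutMs; // how long to stay open before a trial call
    this.now = now;
    this.state = 'CLOSED';
    this.failures = 0;
    this.openedAt = 0;
  }

  // Runs `action`; returns `fallback()` when the breaker is open or the call fails.
  call(action, fallback) {
    if (this.state === 'OPEN') {
      if (this.now() - this.openedAt < this.resetTimeoutMs) {
        return fallback(); // fail fast without touching the failing dependency
      }
      this.state = 'HALF_OPEN'; // timeout elapsed: let one trial request through
    }
    try {
      const result = action();
      this.state = 'CLOSED'; // success closes the breaker and resets the count
      this.failures = 0;
      return result;
    } catch (err) {
      this.failures += 1;
      if (this.state === 'HALF_OPEN' || this.failures >= this.failureThreshold) {
        this.state = 'OPEN';
        this.openedAt = this.now();
      }
      return fallback();
    }
  }
}

// Usage with a fake clock: two failures trip the breaker, and the third call
// is answered from the fallback without invoking the failing dependency.
let fakeTime = 0;
const breaker = new CircuitBreaker({ failureThreshold: 2, resetTimeoutMs: 1000, now: () => fakeTime });
let calls = 0;
const failing = () => {
  calls += 1;
  throw new Error('dependency down');
};
const serveCached = () => 'cached response';
breaker.call(failing, serveCached);
breaker.call(failing, serveCached);
breaker.call(failing, serveCached);
console.log(breaker.state, calls); // OPEN 2
```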

How It Fits into the Site Reliability Engineering Lifecycle

Graceful degradation is embedded in the SRE lifecycle, from design to incident response:

- Design Phase: Architect systems with redundancy, load balancing, and fallback mechanisms.

- Development: Implement degradation handlers and circuit breakers in code.

- Deployment: Use CI/CD pipelines to test degradation scenarios during releases.

- Monitoring: Observe system health to detect when degradation is triggered.

- Incident Response: Activate fallback modes during outages to maintain service.

- Postmortems: Analyze degradation effectiveness to improve future designs.

Architecture & How It Works

Components and Internal Workflow

Graceful degradation relies on several architectural components and patterns to manage failures effectively:

- Load Balancer: Distributes traffic across nodes, redirecting requests away from failing components.

- Service Mesh (e.g., Istio): Manages traffic routing during failures and applies resilience policies such as timeouts, retries, and outlier detection.

- Application Layer: Implements degradation logic, such as serving cached data or read-only modes.

- Monitoring & Alerting: Tracks system health and notifies SRE teams when degradation occurs.

- Circuit Breakers: Prevent overloading failing services by temporarily halting requests.

- Caching Layer: Stores recent data to serve during outages.

Workflow:

- A failure or overload is detected (e.g., database outage or traffic spike).

- The load balancer redirects traffic to healthy nodes or services.

- The service mesh applies fallback rules (e.g., route to secondary service).

- The application serves degraded responses (e.g., cached data or limited features).

- Monitoring tools log the event and alert SRE teams for resolution.
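The second workflow step, redirecting traffic to healthy nodes, can be sketched as a round-robin balancer that skips backends marked unhealthy and falls back to a degraded response when none remain. The backend names and the `healthy` flag are hypothetical; a real load balancer would update health from active or passive health checks.

```javascript
// Round-robin load balancer that skips backends marked unhealthy.
// Backend names and the `healthy` flag are hypothetical.
class HealthAwareBalancer {
  constructor(backends) {
    this.backends = backends; // e.g. [{ name: 'node-a', healthy: true }]
    this.nextIndex = 0;
  }

  // Returns the next healthy backend's name, or null if none are healthy.
  pick() {
    const n = this.backends.length;
    for (let i = 0; i < n; i++) {
      const index = (this.nextIndex + i) % n;
      if (this.backends[index].healthy) {
        this.nextIndex = (index + 1) % n; // continue rotation after this backend
        return this.backends[index].name;
      }
    }
    return null;
  }
}

// When no backend is healthy, serve a degraded response and emit a log line
// that monitoring could alert on.
function handleRequest(balancer, fallbackBody) {
  const target = balancer.pick();
  if (target === null) {
    console.warn('All backends unhealthy: serving degraded response');
    return { servedBy: 'fallback', body: fallbackBody };
  }
  return { servedBy: target, body: `handled by ${target}` };
}

// Usage: node-b is unhealthy, so traffic alternates between node-a and node-c.
const balancer = new HealthAwareBalancer([
  { name: 'node-a', healthy: true },
  { name: 'node-b', healthy: false },
  { name: 'node-c', healthy: true },
]);
console.log(handleRequest(balancer, 'cached page').servedBy); // node-a
console.log(handleRequest(balancer, 'cached page').servedBy); // node-c
```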

Architecture Diagram Description

The architecture for graceful degradation involves a multi-layered system:

- Client Layer: Users interact via browsers or APIs.

- API Gateway/Load Balancer: Routes requests to available services.

- Service Mesh: Manages traffic and applies degradation policies (e.g., Istio VirtualService).

- Application Services: Microservices with fallback logic (e.g., read-only mode).

- Data Layer: Primary and secondary databases or caches (e.g., Redis for fallback data).

- Monitoring: Tools like Prometheus or Google Cloud Monitoring track health.

Diagram (Text-Based Representation):

```
[Client Request] --> [API Gateway / Load Balancer]
        |
        v
[Service Mesh (e.g., Istio)] --> [Primary Service | Fallback Service]
        |
        v
[Application Layer: Degraded Mode (Cached Data, Read-Only)]
        |
        v
[Data Layer: Primary DB | Cache (Redis)]
        |
        v
[Monitoring & Alerting: Prometheus, Google Cloud Monitoring]
```
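The layered fallbacks in the diagram (primary service, fallback service, cache) can be read as an ordered chain tried from the most complete response to the most degraded one. A minimal sketch, assuming each layer is exposed as a hypothetical `{ name, get }` object with a synchronous `get`, and that a static default is always available as the last resort:

```javascript
// Try each layer in order and return the first one that succeeds. `get` may
// throw (layer down) or return undefined (e.g., a cache miss).
function firstAvailable(layers, staticDefault) {
  for (const { name, get } of layers) {
    try {
      const value = get();
      if (value !== undefined) return { value, source: name };
    } catch (err) {
      // This layer failed; fall through to the next, more degraded one.
    }
  }
  // Every layer failed: a static default keeps the response well-formed.
  return { value: staticDefault, source: 'static-default' };
}

// Usage: primary and fallback services are down, so the cache answers.
const down = () => {
  throw new Error('unavailable');
};
const response = firstAvailable(
  [
    { name: 'primary-service', get: down },
    { name: 'fallback-service', get: down },
    { name: 'redis-cache', get: () => ['product-1', 'product-2'] },
  ],
  ['static bestsellers']
);
console.log(response.source); // redis-cache
```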

Integration Points with CI/CD or Cloud Tools

- CI/CD: Integrate degradation tests into pipelines using tools like Jenkins or GitHub Actions to simulate failures.

- Cloud Tools:

  - AWS: Use Elastic Load Balancer (ELB) for traffic routing and AWS Lambda for fallback logic.

  - Google Cloud: Leverage Google Cloud Armor for throttling and Cloud Monitoring for observability.

  - Kubernetes: Use Istio for service mesh and Horizontal Pod Autoscaling for load management.

- Chaos Engineering: Tools like Chaos Monkey simulate failures to test degradation strategies.

Installation & Getting Started

Basic Setup or Prerequisites

To implement graceful degradation, you need:

- A distributed system (e.g., microservices architecture).

- A service mesh (e.g., Istio, Linkerd) for traffic management.

- A monitoring tool (e.g., Prometheus, Grafana).

- A caching solution (e.g., Redis, Memcached).

- A CI/CD pipeline for testing (e.g., Jenkins, CircleCI).

- Basic knowledge of Kubernetes and cloud platforms (AWS, GCP, Azure).

Hands-on: Step-by-Step Beginner-Friendly Setup Guide

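This guide builds the application-level part of graceful degradation: a simple Node.js (Express) service that returns a fallback response when its primary logic fails. The same service can later be containerized and deployed to a Kubernetes cluster, where Istio can add traffic-level policies such as timeouts and retries.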

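```javascript
const express = require('express');

const app = express();

// Primary handler: simulates a dependency that fails about 30% of the time.
app.get('/recommendations', (req, res, next) => {
  if (Math.random() > 0.7) {
    // Forward the failure to the error-handling middleware instead of
    // answering with a 500 here, so the fallback below can respond.
    return next(new Error('Recommendation service unavailable'));
  }
  res.send('Here are your recommendations!');
});

// Fallback handler: Express treats middleware with four arguments as an
// error handler, so `next` must stay in the signature even though it is unused.
app.use((err, req, res, next) => {
  console.warn(`Degraded response for ${req.path}: ${err.message}`);
  res.status(200).send('Fallback: Showing popular items instead.');
});

app.listen(3000, () => console.log('Service running on port 3000'));
```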

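Run: save the code as server.js, install Express, and start the server:

```shell
npm install express
node server.js
```

Test degraded mode by requesting http://localhost:3000/recommendations several times (for example, by refreshing the browser or using curl). Roughly 30% of requests should return the fallback message.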

Real-World Use Cases

Scenario 1: E-Commerce Platform During Traffic Spikes

- Context: An e-commerce site experiences a traffic surge during a flash sale.

- Solution: The system sheds non-critical features (e.g., personalized recommendations) and serves cached product listings. Circuit breakers prevent database overload.

- Outcome: Core functionality (browsing and purchasing) remains available, maintaining revenue flow.
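The load shedding in Scenario 1 can be sketched as an admission check that drops lower-priority request classes first as utilization rises. The request classes, priorities, and the 80%/95% thresholds are illustrative assumptions, not recommended values.

```javascript
// Lower number = more critical. Classes and thresholds are illustrative assumptions.
const PRIORITY = { checkout: 0, browse: 1, recommendations: 2 };

// Decide whether to admit a request given the current in-flight load.
function shouldAdmit(requestClass, inFlight, capacity) {
  const utilization = inFlight / capacity;
  // Unknown request classes are treated as least critical.
  const priority = Object.prototype.hasOwnProperty.call(PRIORITY, requestClass)
    ? PRIORITY[requestClass]
    : 2;
  if (utilization >= 0.95) return priority === 0; // near saturation: checkout only
  if (utilization >= 0.8) return priority <= 1; // under pressure: shed recommendations
  return true; // normal load: admit everything
}

// Usage: at 85% utilization, browsing is admitted but recommendations are shed.
console.log(shouldAdmit('browse', 85, 100)); // true
console.log(shouldAdmit('recommendations', 85, 100)); // false
```

Shedding happens at admission time, before any work is done, so a rejected request costs almost nothing; it would typically get a fast error or a cached page rather than waiting in a queue.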

Scenario 2: Financial Service During IAM Outage

- Context: An external Identity and Access Management (IAM) provider fails, blocking user authentication.

- Solution: The system switches to local token validation with limited privileges, allowing read-only access to account details.

- Outcome: Users can still view their balances while the IAM provider is restored, preserving trust.
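The local-validation fallback in Scenario 2 might look like the sketch below, which uses Node's built-in `crypto` module to verify an HMAC-signed token and then allows only read actions. The token format (`<base64url JSON>.<hex HMAC-SHA256>`), `LOCAL_KEY`, and the function names are hypothetical; a real deployment would more likely verify the IAM provider's own signed tokens (for example, JWTs) against keys cached before the outage.

```javascript
const crypto = require('crypto');

// Hypothetical key the service already holds. Hypothetical token format:
// "<base64url-encoded JSON claims>.<hex HMAC-SHA256 of the first part>".
const LOCAL_KEY = 'replace-with-a-real-secret';

function hmacHex(data) {
  return crypto.createHmac('sha256', LOCAL_KEY).update(data).digest('hex');
}

// Issues a token in the hypothetical format (used here only for the demo).
function sign(claims) {
  const payload = Buffer.from(JSON.stringify(claims)).toString('base64url');
  return `${payload}.${hmacHex(payload)}`;
}

// Degraded-mode check: verify the token locally and allow read-only actions.
function authorizeDegraded(token, action, nowSeconds = Math.floor(Date.now() / 1000)) {
  const [payload, sig] = String(token).split('.');
  if (!payload || !sig) return false;
  const given = Buffer.from(sig, 'hex');
  const expected = Buffer.from(hmacHex(payload), 'hex');
  // Constant-time comparison; any mismatch fails closed.
  if (given.length !== expected.length || !crypto.timingSafeEqual(given, expected)) {
    return false;
  }
  let claims;
  try {
    claims = JSON.parse(Buffer.from(payload, 'base64url').toString('utf8'));
  } catch (err) {
    return false;
  }
  if (!claims || typeof claims.exp !== 'number' || claims.exp <= nowSeconds) return false;
  return action === 'read'; // degraded mode grants read access only
}

// Usage: a valid token can read balances but cannot initiate a transfer.
const token = sign({ sub: 'user-123', exp: Math.floor(Date.now() / 1000) + 300 });
console.log(authorizeDegraded(token, 'read')); // true
console.log(authorizeDegraded(token, 'transfer')); // false
```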

Scenario 3: CI/CD Pipeline with SAST Failure

- Context: A Static Application Security Testing (SAST) scanner fails during a CI build.

- Solution: The pipeline proceeds with a warning, flagging the build for manual review.

- Outcome: Development velocity is maintained, and the manual-review flag ensures the skipped scan is not silently ignored.
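The key design point in Scenario 3 is distinguishing "the scanner could not run" (degrade with a warning) from "the scanner found problems" (fail the build). A minimal sketch, assuming a hypothetical result object `{ exitCode, findings }` and a hypothetical exit-code convention of 0 for clean, 1 for findings, and anything else for a tool error:

```javascript
// `scan` is a hypothetical result object from a SAST step, or null if the
// scanner never produced a result (crash, timeout).
function sastGate(scan) {
  if (scan == null) {
    return { status: 'warn', reason: 'scanner produced no result; manual review required' };
  }
  if (scan.exitCode === 0) {
    return { status: 'pass', reason: 'no findings' };
  }
  if (scan.exitCode === 1) {
    // Real findings still block the build: degradation never hides them.
    return { status: 'fail', reason: `${scan.findings} finding(s) reported` };
  }
  // The tool itself failed: proceed, but flag the build for manual review.
  return { status: 'warn', reason: `scanner error (exit ${scan.exitCode}); manual review required` };
}

console.log(sastGate({ exitCode: 1, findings: 2 }).status); // fail
console.log(sastGate({ exitCode: 137 }).status); // warn
```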

Scenario 4: Streaming Service During Log Delay

- Context: A streaming platform’s real-time logging system falls behind during a traffic spike.

- Solution: The system serves cached metadata and prioritizes video streaming over analytics.

- Outcome: Users experience uninterrupted streaming, with analytics processed later.
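Prioritizing streaming over analytics in Scenario 4 can be sketched with a bounded in-memory buffer: analytics events are queued for later processing, and once the buffer is full they are dropped and counted rather than allowed to slow down video delivery. The capacity, event shape, and function names are illustrative assumptions, and dropping overflow events is a design choice of this sketch, not something the scenario prescribes.

```javascript
// Bounded buffer for analytics events. Capacity is an illustrative assumption.
class BoundedEventBuffer {
  constructor(capacity) {
    this.capacity = capacity;
    this.events = [];
    this.dropped = 0; // counted so the data loss is visible in monitoring
  }

  // Never blocks: queues the event if there is room, otherwise drops it.
  push(event) {
    if (this.events.length >= this.capacity) {
      this.dropped += 1;
      return false;
    }
    this.events.push(event);
    return true;
  }

  // Removes and returns up to `max` queued events, e.g. once the logging
  // pipeline has caught up.
  drain(max) {
    return this.events.splice(0, max);
  }
}

// Critical path: the video segment is returned regardless of analytics state.
function serveSegment(segmentId, analytics) {
  analytics.push({ type: 'segment_served', segmentId, at: Date.now() });
  return `video-segment-${segmentId}`;
}

// Usage: with room for only two events, the third is dropped but still served.
const analytics = new BoundedEventBuffer(2);
for (const id of [1, 2, 3]) serveSegment(id, analytics);
console.log(analytics.events.length, analytics.dropped); // 2 1
```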

Benefits & Limitations

Key Advantages

| Benefit | Description |
|---|---|
| Enhanced Availability | Ensures core services remain operational during failures. |
| Improved User Experience | Provides partial functionality, reducing user frustration. |
| Fault Isolation | Prevents cascading failures by containing issues to specific components. |
| Cost Efficiency | Reduces the need for over-provisioning by handling failures gracefully. |

Common Challenges or Limitations

| Challenge | Description |
|---|---|
| Complexity | Designing and testing degradation paths adds architectural complexity. |
| Data Consistency | Degraded modes may serve stale or inconsistent data, requiring validation. |
| Performance Overhead | Detecting failures and switching to fallbacks (e.g., waiting for timeouts, extra cache lookups) can add latency. |
| Testing Difficulty | Simulating every failure scenario is resource-intensive and complex. |

Best Practices & Recommendations

Security Tips

- Secure Fallbacks: Ensure degraded modes (e.g., read-only) adhere to security policies, avoiding default allow states.

- Zero-Trust Integration: Use zero-trust principles to validate requests in degraded modes.

- Audit Logs: Maintain logs of degradation events for compliance and analysis.
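The "no default allow" and audit-log tips can be combined in a fail-closed authorization check. A minimal sketch, assuming a hypothetical synchronous `checkPolicy` call to a policy service and an in-memory array as the audit log; when the policy service is unreachable, only actions on an explicit allowlist succeed.

```javascript
// Actions allowed when the policy service is unreachable (hypothetical names).
const DEGRADED_ALLOWLIST = new Set(['read:public']);

// `checkPolicy` is a hypothetical synchronous call to a policy service that
// returns true/false or throws when the service is unavailable.
function authorize(user, action, checkPolicy, auditLog) {
  let allowed;
  let mode = 'normal';
  try {
    allowed = checkPolicy(user, action) === true; // anything but an explicit true denies
  } catch (err) {
    mode = 'degraded';
    allowed = DEGRADED_ALLOWLIST.has(action); // default deny, never default allow
  }
  // Record every decision, including the mode, for compliance and postmortems.
  auditLog.push({ time: new Date().toISOString(), user, action, allowed, mode });
  return allowed;
}

// Usage: with the policy service down, public reads succeed and writes are denied.
const auditLog = [];
const policyDown = () => {
  throw new Error('policy service unavailable');
};
console.log(authorize('alice', 'read:public', policyDown, auditLog)); // true
console.log(authorize('alice', 'write:account', policyDown, auditLog)); // false
```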

Performance

- Optimize Caching: Use in-memory caches like Redis to minimize latency in fallback modes.

- Load Shedding: Prioritize critical requests by throttling non-essential ones during spikes.

- Circuit Breakers: Implement circuit breakers to prevent overloading failing services.

Maintenance

- Regular Testing: Use chaos engineering (e.g., Chaos Monkey) to validate degradation strategies.

- Monitoring: Set up alerts for degradation events using tools like Prometheus.

- Postmortems: Analyze degradation incidents to refine fallback logic.
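Alerting on degradation usually means tracking what fraction of responses were served in degraded mode. The sketch below keeps a sliding time window in memory and flags an alert when the degraded ratio crosses a threshold; the window, threshold, and class name are assumptions, and in production the same logic would typically be expressed as Prometheus metrics plus an alerting rule.

```javascript
class DegradationMonitor {
  constructor({ windowMs = 60000, threshold = 0.05, now = Date.now } = {}) {
    this.windowMs = windowMs; // sliding window length
    this.threshold = threshold; // alert when the degraded ratio exceeds this
    this.now = now; // injectable clock for testing
    this.samples = [];
  }

  // Call once per response, passing whether it was served in degraded mode.
  record(degraded) {
    this.samples.push({ t: this.now(), degraded });
  }

  degradedRatio() {
    const cutoff = this.now() - this.windowMs;
    this.samples = this.samples.filter((s) => s.t > cutoff); // drop expired samples
    if (this.samples.length === 0) return 0;
    return this.samples.filter((s) => s.degraded).length / this.samples.length;
  }

  shouldAlert() {
    return this.degradedRatio() > this.threshold;
  }
}

// Usage: 2 degraded responses out of 10 exceed the default 5% threshold.
const monitor = new DegradationMonitor();
for (let i = 0; i < 10; i++) monitor.record(i < 2);
console.log(monitor.degradedRatio(), monitor.shouldAlert()); // 0.2 true
```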

Compliance Alignment

- Align with regulations (e.g., GDPR, HIPAA) by ensuring degraded modes protect sensitive data.

- Use policy-as-code (e.g., Open Policy Agent) to enforce compliance during degradation.

Automation Ideas

- Automate fallback activation using AI-driven anomaly detection.

- Integrate degradation tests into CI/CD pipelines for continuous validation.
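As a much simpler stand-in for AI-driven anomaly detection (this is a z-score check, not a machine-learning model), automatic fallback activation could be triggered when the current error rate is a statistical outlier relative to recent history. The threshold of 3 standard deviations, the sample data, and the function name are illustrative assumptions.

```javascript
// Returns true when `current` sits more than `zThreshold` sample standard
// deviations above the mean of `history`.
function isAnomalous(history, current, zThreshold = 3) {
  if (history.length < 2) return false; // not enough data to judge
  const mean = history.reduce((sum, x) => sum + x, 0) / history.length;
  const variance =
    history.reduce((sum, x) => sum + (x - mean) ** 2, 0) / (history.length - 1);
  const std = Math.sqrt(variance);
  if (std === 0) return current > mean; // flat history: any increase stands out
  return (current - mean) / std > zThreshold; // only upward spikes matter for error rates
}

// Usage: per-minute error rates (fraction of failed requests).
const recentErrorRates = [0.010, 0.012, 0.011, 0.009, 0.010];
if (isAnomalous(recentErrorRates, 0.08)) {
  console.log('Error-rate spike detected: enabling fallback mode'); // hypothetical hook
}
```

A fixed statistical rule like this is easy to reason about during an incident; a learned model would replace `isAnomalous` but keep the same trigger point.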

Comparison with Alternatives

Comparison Table

| Aspect | Graceful Degradation | Progressive Enhancement | Fault Tolerance |
|---|---|---|---|
| Definition | Maintains partial functionality during failures | Builds from basic functionality upward | Aims to keep full service running despite component failures |
| Focus | Fallbacks and reduced features | Baseline functionality with enhancements | Redundancy and failover |
| Complexity | Moderate (requires fallback logic) | Low (starts simple, adds complexity) | High (requires full redundancy) |
| Use Case | High-traffic systems, microservices | Web apps with diverse browsers | Mission-critical systems (e.g., banking) |
| Performance Impact | Possible latency from fallbacks | Minimal, as enhancements are optional | High resource usage for redundancy |

When to Choose Graceful Degradation

- Choose Graceful Degradation: When partial functionality is acceptable, and you want to balance cost and reliability in distributed systems.

- Choose Alternatives:

  - Progressive Enhancement: For web apps needing broad browser compatibility.

  - Fault Tolerance: For systems requiring zero downtime (e.g., financial transactions).

Conclusion

Graceful degradation is a critical strategy in SRE for building resilient systems that maintain user trust and business continuity during failures. By leveraging architectural patterns like redundancy, load balancing, and circuit breakers, SRE teams can ensure systems degrade gracefully under stress. While it introduces complexity, the benefits of availability and fault isolation make it indispensable for modern distributed systems.

Future Trends

- AI-Driven Degradation: Machine learning models to predict and automate fallback decisions.

- Zero-Trust Integration: Tighter coupling with zero-trust security for secure degradation.

- Chaos Engineering: Increased adoption of tools like Gremlin for proactive failure testing.

Next Steps

- Explore chaos engineering to test degradation strategies.

- Implement monitoring and alerting to track degradation events.

- Review Google Cloud’s Well-Architected Framework for reliability best practices.