Introduction & Overview

What is a Content Delivery Network (CDN)?

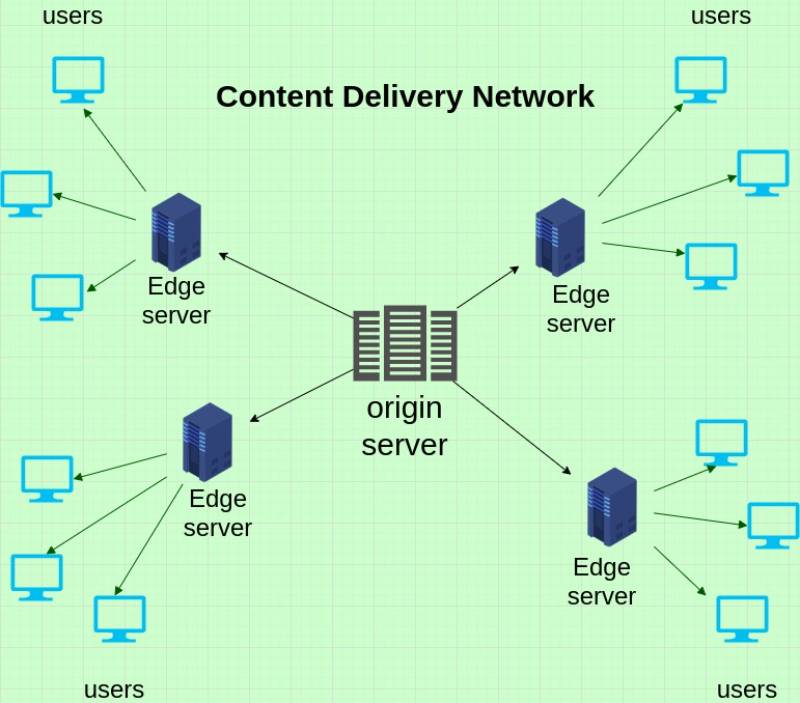

A Content Delivery Network (CDN) is a geographically distributed network of servers designed to deliver web content and resources to users with high availability, low latency, and enhanced performance. CDNs cache content such as web pages, images, videos, JavaScript, and CSS files at edge locations closer to end users. By reducing the physical distance data travels, CDNs minimize latency and offload traffic from origin servers, ensuring faster and more reliable content delivery.

History or Background

CDNs emerged in the late 1990s to address the growing demand for faster content delivery as websites became more complex with multimedia and dynamic elements. Akamai Technologies, founded in 1998, pioneered the CDN concept by deploying edge servers to cache static content. Over time, CDNs evolved to handle dynamic content, streaming media, and security features like DDoS protection and Web Application Firewalls (WAFs). Today, providers like Cloudflare, AWS CloudFront, and Fastly power much of the internet’s content delivery infrastructure.

- 1990s: Websites relied on single data centers → latency issues for global users.

- 1998: Akamai pioneered CDN technology.

- 2000s: Media streaming, e-commerce, and gaming adopted CDNs for scaling.

- 2010s–present: Cloud providers (AWS CloudFront, Azure CDN, Cloudflare, Fastly) made CDNs a core infrastructure component.

Why is it Relevant in Site Reliability Engineering?

In Site Reliability Engineering (SRE), CDNs are critical for achieving scalability, reliability, and performance goals. They align with SRE principles by:

- Reducing Latency: Edge servers deliver content from locations closer to users, minimizing response times.

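- Improving Availability: Edge servers can keep serving cached content even when the origin server is unavailable, at least until that content expires (longer if the CDN is configured to serve stale content on origin errors).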

- Scaling Efficiently: CDNs handle traffic spikes by offloading requests from origin servers.

- Enhancing Security: Built-in features like DDoS mitigation and TLS encryption protect applications.

CDNs enable SREs to meet Service Level Objectives (SLOs) for uptime and latency while reducing operational toil through automation and monitoring.

Core Concepts & Terminology

Key Terms and Definitions

- Edge Server: A server located at a Point of Presence (POP) that caches content and serves it to users in a specific region.

- Origin Server: The primary server hosting the original content, such as a web server or cloud storage bucket.

- Cache Hit/Miss: A cache hit occurs when requested content is found in the edge server’s cache; a miss occurs when the edge server must fetch it from the origin.

- Time to Live (TTL): The duration content remains cached before it expires and needs refreshing.

- Purge: The process of removing specific or outdated content from the CDN cache before its TTL expires (AWS CloudFront calls this an invalidation).

- Point of Presence (POP): A physical location hosting edge servers, typically in major cities worldwide.

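- Anycast (Anycast DNS): A routing technique in which the same IP address is announced from many POPs, so the network delivers each request to a POP that is close in routing terms. CDNs apply it to their DNS servers (Anycast DNS) and often to the edge servers themselves.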

| Term | Definition | Relevance in SRE |
|---|---|---|
| Edge Server | A CDN server close to the user | Minimizes latency |
| POP (Point of Presence) | CDN server location in a city/region | Improves global coverage |
| Origin Server | The main application server | Source of truth for content |
| Caching | Storing static/dynamic responses temporarily | Reduces origin load |
| TTL (Time To Live) | Duration a cached file is stored before refresh | Balances freshness & performance |
| Load Balancing | Distributes traffic across multiple servers | High availability |
| DDoS Mitigation | Blocking malicious traffic | Security & reliability |
| Anycast Routing | Same IP served from multiple POPs | Faster routing |
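The following small in-memory model of one edge server shows how cache hits, misses, TTL and purges interact. This is a minimal illustration, not how any particular CDN is built. The EdgeCache class and the fetch_from_origin callable are hypothetical, and a single fixed TTL applies to every object. The current time is passed in explicitly so the behaviour is easy to follow.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class CacheEntry:
    body: bytes
    expires_at: float  # absolute time (seconds) after which the copy is stale


class EdgeCache:
    """Hypothetical model of a single edge server's cache with one fixed TTL."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes], ttl_seconds: float):
        self.fetch_from_origin = fetch_from_origin  # stands in for the origin server
        self.ttl_seconds = ttl_seconds
        self.entries: Dict[str, CacheEntry] = {}
        self.hits = 0
        self.misses = 0

    def get(self, path: str, now: float) -> Tuple[bytes, str]:
        entry = self.entries.get(path)
        if entry is not None and now < entry.expires_at:
            # Cache hit: the edge answers without contacting the origin.
            self.hits += 1
            return entry.body, "HIT"
        # Cache miss (never cached, expired, or purged): fetch and store a fresh copy.
        self.misses += 1
        body = self.fetch_from_origin(path)
        self.entries[path] = CacheEntry(body, now + self.ttl_seconds)
        return body, "MISS"

    def purge(self, path: str) -> None:
        # Purge: drop the cached copy so the next request goes back to the origin.
        self.entries.pop(path, None)


if __name__ == "__main__":
    cache = EdgeCache(lambda path: f"content of {path}".encode(), ttl_seconds=60)
    print(cache.get("/logo.png", now=0)[1])   # MISS: first request
    print(cache.get("/logo.png", now=30)[1])  # HIT: within the 60 s TTL
    print(cache.get("/logo.png", now=61)[1])  # MISS: TTL expired
    cache.purge("/logo.png")
    print(cache.get("/logo.png", now=62)[1])  # MISS: purged
```

A real edge also evicts entries when storage fills up and derives TTLs from response headers, which this sketch leaves out.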

How it Fits into the Site Reliability Engineering Lifecycle

CDNs integrate into the SRE lifecycle at multiple stages:

- Design: Plan for global content delivery by selecting a CDN with adequate POP coverage.

- Deployment: Integrate CDNs with CI/CD pipelines to automate content updates and cache purges.

- Monitoring: Track metrics like cache hit ratios, latency, and error rates to ensure performance aligns with SLOs.

- Incident Response: Leverage CDNs for failover during origin outages or to mitigate DDoS attacks.
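In the monitoring stage, checking performance against an SLO often comes down to simple arithmetic on request counts. The sketch below assumes a request-based availability SLO. It also assumes, hypothetically, that a "failed" request means a 5xx response served to users; use whatever definition your SLO specifies. For example, a 99.9% target over 1,000,000 requests allows 1,000 failures, so 400 failures leave 60% of the error budget.

```python
from dataclasses import dataclass


@dataclass
class ErrorBudgetReport:
    availability: float      # fraction of requests that succeeded
    allowed_failures: float  # failures the SLO permits at this request volume
    budget_remaining: float  # fraction of the error budget not yet spent (can go negative)


def error_budget(total_requests: int, failed_requests: int, slo_target: float) -> ErrorBudgetReport:
    """Compute availability and remaining error budget for a request-based SLO."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be a fraction such as 0.999")
    availability = 1.0 - failed_requests / total_requests
    allowed_failures = (1.0 - slo_target) * total_requests
    budget_remaining = 1.0 - failed_requests / allowed_failures
    return ErrorBudgetReport(availability, allowed_failures, budget_remaining)


if __name__ == "__main__":
    report = error_budget(total_requests=1_000_000, failed_requests=400, slo_target=0.999)
    print(f"availability={report.availability:.4%} "
          f"allowed={report.allowed_failures:.0f} remaining={report.budget_remaining:.0%}")
```

A negative budget_remaining means the SLO has already been missed for the period.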

Architecture & How It Works

Components and Internal Workflow

A CDN comprises several key components:

- Origin Server: Hosts the original content, such as a web server or cloud storage (e.g., AWS S3, Google Cloud Storage).

- Edge Servers: Cache content at POPs and serve it to users, reducing latency.

- DNS Routing: Uses Anycast or GeoDNS to direct user requests to the nearest or least-loaded edge server.

- Cache Management: Controls content storage, expiration (via TTL), and purging.

- Security Layer: Provides features like WAF, DDoS protection, and TLS/SSL encryption.

Workflow:

- A user requests content (e.g., a webpage or video) via a browser.

- The CDN’s DNS (or Anycast routing) directs the request to a nearby edge server, usually chosen from the location of the user’s network or DNS resolver.

- If the content is cached (cache hit), the edge server delivers it immediately.

- If the content is not cached (cache miss), the edge server fetches it from the origin, caches it locally, and delivers it to the user.

- The edge server goes back to the origin for that object only after the cached copy expires (its TTL elapses) or is purged.
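The workflow can be traced in a short simulation, built under several simplifying assumptions. The POP names and coordinates are hypothetical. "Nearest" means plain great-circle distance; real CDNs also weigh network topology, load and health, and with Anycast the choice is left to BGP. TTLs are ignored so the hit/miss path stays visible.

```python
import math
from typing import Dict, Tuple

Location = Tuple[float, float]  # (latitude, longitude) in degrees

# Hypothetical POPs with approximate city coordinates.
POPS: Dict[str, Location] = {
    "new-york": (40.71, -74.01),
    "frankfurt": (50.11, 8.68),
    "mumbai": (19.08, 72.88),
    "tokyo": (35.68, 139.69),
}


def haversine_km(a: Location, b: Location) -> float:
    """Great-circle distance between two points, in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def nearest_pop(user: Location) -> str:
    """Route the user to the closest POP (a GeoDNS-style decision)."""
    return min(POPS, key=lambda pop: haversine_km(user, POPS[pop]))


class ToyCDN:
    def __init__(self, origin: Dict[str, str]):
        self.origin = origin  # path -> content held by the origin server
        self.caches: Dict[str, Dict[str, str]] = {pop: {} for pop in POPS}
        self.origin_fetches = 0

    def request(self, user: Location, path: str) -> Tuple[str, str, str]:
        pop = nearest_pop(user)
        cache = self.caches[pop]
        if path in cache:
            return pop, "HIT", cache[path]  # cache hit: served directly at the edge
        # Cache miss: fetch from the origin, store at this POP, then deliver.
        self.origin_fetches += 1
        cache[path] = self.origin[path]
        return pop, "MISS", cache[path]


if __name__ == "__main__":
    cdn = ToyCDN({"/index.html": "<h1>Hello</h1>"})
    osaka, paris = (34.69, 135.50), (48.86, 2.35)
    print(cdn.request(osaka, "/index.html")[:2])  # ('tokyo', 'MISS')
    print(cdn.request(osaka, "/index.html")[:2])  # ('tokyo', 'HIT')
    print(cdn.request(paris, "/index.html")[:2])  # ('frankfurt', 'MISS'): separate POP cache
```

Each POP keeps its own cache, so a popular object is fetched from the origin once per POP rather than once per user.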

Architecture Diagram (Textual Description)

The CDN architecture can be described as a layered system:

- Users: End users accessing content from various global locations (e.g., New York, Tokyo, Mumbai).

- DNS Layer: A global DNS system routes requests to the nearest POP using Anycast or GeoDNS.

- Points of Presence (POPs): Geographically distributed data centers hosting edge servers (e.g., 300+ POPs for Cloudflare globally).

- Edge Servers: Cache static and dynamic content, connected to the origin via a high-speed backbone network.

- Origin Server: A central server (e.g., AWS EC2, Azure VM) hosting the original content.

- Connections: HTTPS/TLS links between users and edge servers, and between edge servers and the origin, encrypt data in transit when HTTPS is enforced on both hops.

```text
                +--------------------+
                |   Origin Server    |
                +--------------------+
                          ^
                          |
         +----------------+----------------+
         |                                 |
+------------------+             +------------------+
|  CDN Edge POP 1  | <---------> |  CDN Edge POP 2  |
+------------------+             +------------------+
         ^                                 ^
         |                                 |
   +----------+                      +----------+
   |  Users   |                      |  Users   |
   +----------+                      +----------+
```

The flow resembles a tree: users connect to the nearest POP, which either serves cached content or fetches it from the origin via the backbone.

Integration Points with CI/CD or Cloud Tools

CDNs integrate with common DevOps and cloud tools:

- CI/CD Pipelines: Automate cache purges during deployments using provider APIs and CLIs (e.g., the Cloudflare API or the AWS CLI’s aws cloudfront create-invalidation command).

- Cloud Storage: Serve content from AWS S3, Google Cloud Storage, or Azure Blob Storage by using the bucket as the CDN’s origin.

- Monitoring Tools: Integrate with Datadog, Prometheus, or New Relic to monitor cache hit ratios, latency, and errors.

- Infrastructure as Code: Use Terraform or AWS CDK to provision and manage CDN configurations.

Installation & Getting Started

Basic Setup or Prerequisites

To set up a CDN, you need:

- A website or application hosted on an origin server (e.g., AWS EC2, GCP Compute Engine, or a static site on S3).

- A registered domain name with access to DNS management.

- An account with a CDN provider (e.g., Cloudflare, AWS CloudFront, Akamai, Fastly).

- An SSL/TLS certificate for secure content delivery (most providers offer free certificates).

- Basic knowledge of DNS configuration and HTTP protocols.

Hands-on: Step-by-Step Beginner-Friendly Setup Guide

This example uses AWS CloudFront to set up a CDN for a static website hosted on an S3 bucket.

1. Create a CloudFront Distribution:

- Log in to the AWS Management Console.

- Navigate to CloudFront > Create Distribution.

- Set the origin domain to your S3 bucket (e.g., my-website-bucket.s3.amazonaws.com) or web server URL.

- Specify the origin path if needed (e.g., /static for specific folders).

2. Configure Settings:

- Set cache behavior: Define TTL (e.g., 24 hours for static assets) and enable query string forwarding if needed.

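- Enable HTTPS: To serve your own domain (e.g., cdn.mydomain.com), add it as an alternate domain name (CNAME) on the distribution and attach a matching SSL/TLS certificate from AWS Certificate Manager (ACM). CloudFront requires ACM certificates to be in the US East (N. Virginia) region, us-east-1.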

- Configure default behavior: Set the viewer protocol policy to “Redirect HTTP to HTTPS.”

- Optionally, enable WAF for security or geo-restrictions for compliance.

3. Update DNS:

- After creating the distribution, note the CloudFront domain (e.g., d1234.cloudfront.net).

- In your DNS provider (e.g., Route 53, GoDaddy), create a CNAME record pointing your domain (e.g., cdn.mydomain.com) to the CloudFront domain.

- Wait for DNS propagation (up to 24 hours).

4. Test the Setup:

- Access your website via the CDN URL (e.g., cdn.mydomain.com).

- Verify content delivery by checking browser load times.

- Monitor cache hit ratios and latency in the CloudFront dashboard.
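Load times alone do not show whether the edge actually served a response. CloudFront adds an X-Cache response header (for example "Hit from cloudfront" or "Miss from cloudfront") and an X-Amz-Cf-Pop header naming the edge location, so a short script can check both. The sketch below uses only Python's standard library. The labels returned by classify_x_cache are our own naming, not CloudFront's.

```python
import urllib.request
from typing import Mapping, Optional, Tuple


def header(headers: Mapping[str, str], name: str) -> Optional[str]:
    """Case-insensitive header lookup."""
    return next((v for k, v in headers.items() if k.lower() == name.lower()), None)


def classify_x_cache(headers: Mapping[str, str]) -> str:
    """Map CloudFront's X-Cache value (e.g. 'Hit from cloudfront') to a short label."""
    value = header(headers, "X-Cache") or ""
    first_word = value.split(" ", 1)[0].lower()
    labels = {"hit": "HIT", "refreshhit": "REFRESH_HIT", "miss": "MISS", "error": "ERROR"}
    return labels.get(first_word, "UNKNOWN")


def check_url(url: str, timeout: float = 10.0) -> Tuple[str, Optional[str]]:
    """Send a HEAD request and return (cache label, edge POP identifier or None)."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        headers = dict(response.headers.items())
    return classify_x_cache(headers), header(headers, "X-Amz-Cf-Pop")
```

For example, calling check_url on https://cdn.mydomain.com/index.html twice in a row would typically report a miss and then a hit from the same POP, assuming the object is cacheable.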

Code Snippet: Purge the CloudFront cache using the AWS CLI after content updates:

```shell
aws cloudfront create-invalidation --distribution-id E1234567890 --paths "/*"
```

Note: Replace E1234567890 with your distribution ID and adjust paths as needed.
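In a CI/CD pipeline the same invalidation is often issued from code. The sketch below assumes the boto3 SDK, whose CloudFront client exposes create_invalidation(DistributionId=..., InvalidationBatch=...). The path-building rule is a hypothetical choice, not AWS guidance: invalidate each changed file individually, or fall back to "/*" once more than max_paths files changed. The client is passed in so the logic can be exercised without AWS credentials.

```python
import time
from typing import Any, Dict, Iterable


def build_invalidation_batch(changed_files: Iterable[str], max_paths: int = 15) -> Dict[str, Any]:
    """Turn deployed file names into a CloudFront InvalidationBatch dict."""
    # Invalidation paths start with "/"; dedupe and sort for stable output.
    paths = sorted({"/" + f.strip().lstrip("/") for f in changed_files if f.strip()})
    if not paths:
        raise ValueError("no changed files to invalidate")
    if len(paths) > max_paths:
        paths = ["/*"]  # hypothetical rule: one wildcard instead of many individual paths
    return {
        "Paths": {"Quantity": len(paths), "Items": paths},
        # CallerReference should be unique for each new invalidation request.
        "CallerReference": f"deploy-{time.time_ns()}",
    }


def invalidate(client: Any, distribution_id: str, changed_files: Iterable[str]) -> str:
    """Submit the invalidation and return its ID (client: a boto3 CloudFront client)."""
    batch = build_invalidation_batch(changed_files)
    response = client.create_invalidation(DistributionId=distribution_id, InvalidationBatch=batch)
    return response["Invalidation"]["Id"]
```

With real credentials this might be called as invalidate(boto3.client("cloudfront"), "E1234567890", ["index.html", "css/site.css"]). boto3 also provides an invalidation_completed waiter if the pipeline should block until the purge finishes.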

Real-World Use Cases

CDNs are applied across industries to solve SRE challenges. Here are four scenarios:

1. E-commerce Platform:

- Scenario: A global retailer experiences slow page loads during Black Friday sales due to high traffic.

- CDN Application: Uses Cloudflare to cache product images, CSS, and JavaScript, reducing page load times by 40%. The CDN handles traffic spikes, ensuring 99.9% uptime.

- Impact: Improved user experience and increased conversion rates.

2. Streaming Service:

- Scenario: A video streaming platform needs low-latency playback for millions of users worldwide.

- CDN Application: Leverages Akamai to cache video segments at edge servers, reducing buffering. Dynamic content acceleration ensures smooth API responses for user authentication.

- Impact: Enhanced streaming quality and reduced origin server load.

3. SaaS Application:

- Scenario: A SaaS provider faces API latency issues and occasional DDoS attacks.

- CDN Application: Uses Fastly to cache static assets and protect APIs with a WAF. GeoDNS routes users to the nearest POP, improving response times.

- Impact: Higher reliability and protection against security threats.

4. Gaming Industry:

- Scenario: An online gaming company needs to distribute large game patches quickly.

- CDN Application: Employs AWS CloudFront to cache patches at edge locations, minimizing download times for players in different regions.

- Impact: Faster updates and improved player satisfaction.

Benefits & Limitations

Key Advantages

- Faster Content Delivery: Edge servers reduce latency by serving content from locations closer to users.

- Scalability: CDNs handle traffic surges without overloading origin servers, ideal for flash sales or viral content.

- Enhanced Security: Features like DDoS protection, WAF, and TLS encryption safeguard applications.

- Cost Efficiency: Reduces bandwidth costs by offloading traffic from origin servers.

Common Challenges or Limitations

- Cache Invalidation Delays: Purging outdated content can take time, leading to stale data being served.

- Complex Configuration: Misconfigurations (e.g., incorrect TTL or cache rules) can cause downtime or performance issues.

- Cost for High Traffic: Large-scale usage, especially for dynamic content, can incur significant costs.

- Limited Dynamic Content Support: CDNs are less effective for highly dynamic, user-specific content.
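Incorrect TTL settings are easier to avoid when you know how they combine with the origin's Cache-Control header. The sketch below models our reading of AWS's documented behaviour for CloudFront's minimum, default and maximum TTL settings:

- no-cache/no-store/private responses are cached only for the minimum TTL (not at all when it is 0);
- a max-age value is clamped between the minimum and maximum TTL;
- responses without a caching header get the default TTL.

It ignores s-maxage, Expires and cache policies, so treat it as a reasoning aid rather than a specification. The default arguments mirror the 24-hour default TTL used in the setup guide.

```python
from typing import Dict, Optional


def parse_cache_control(value: Optional[str]) -> Dict[str, str]:
    """Split a Cache-Control header into {directive: value} ('' when no value)."""
    directives: Dict[str, str] = {}
    for part in (value or "").split(","):
        name, _, arg = part.partition("=")
        name = name.strip().lower()
        if name:
            directives[name] = arg.strip().strip('"')
    return directives


def effective_ttl(cache_control: Optional[str], min_ttl: int = 0,
                  default_ttl: int = 86400, max_ttl: int = 31536000) -> int:
    """Seconds an edge would keep the object under the simplified rules above."""
    directives = parse_cache_control(cache_control)
    if directives.keys() & {"no-cache", "no-store", "private"}:
        return min_ttl  # 0 means the response is not cached
    max_age = directives.get("max-age", "")
    if max_age.isdigit():
        return max(min_ttl, min(int(max_age), max_ttl))  # clamp into [min_ttl, max_ttl]
    return default_ttl  # no usable caching header from the origin


if __name__ == "__main__":
    for cc in (None, "public, max-age=3600", "max-age=60", "no-store"):
        print(cc, effective_ttl(cc, min_ttl=300))
```

Raising min_ttl overrides an origin that asks for short or no caching, which is a common source of stale content.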

Table: Benefits vs. Limitations

| Benefits | Limitations |
|-----------------------------|-----------------------------------|
| Low latency | Cache invalidation delays |
| Scalability | Complex setup |
| Enhanced security | Cost for high traffic |
| Cost-efficient bandwidth | Limited dynamic content support |

Best Practices & Recommendations

Security Tips, Performance, Maintenance

- Security:

- Enable WAF to block malicious requests (e.g., SQL injection, XSS).

- Use signed URLs or cookies for restricted content access.

- Restrict origin server access to CDN IPs only to prevent direct attacks.

- Performance:

- Optimize TTL settings: Use longer TTLs for static assets (e.g., images) and shorter TTLs for dynamic content.

- Compress assets (e.g., Gzip, Brotli) before caching to reduce transfer size.

- Enable HTTP/2 or HTTP/3 (QUIC) for faster protocol performance.

- Maintenance:

- Automate cache purges using CI/CD pipelines for content updates.

- Monitor cache hit ratios and latency using tools like Prometheus or CloudWatch.

- Regularly audit CDN configurations for compliance and performance.
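Restricting the origin to CDN addresses usually means keeping an allowlist in sync with the ranges the provider publishes. For example, AWS publishes its ranges at https://ip-ranges.amazonaws.com/ip-ranges.json. Each entry carries an ip_prefix (or ipv6_prefix) and a service field such as "CLOUDFRONT".

The sketch below filters that structure with Python's ipaddress module. Check the current file for the exact service names, since AWS also publishes a narrower origin-facing set. Where possible, enforce the resulting ranges in a firewall or security group rather than in application code. The sample data uses documentation-only address ranges.

```python
import ipaddress
from typing import Any, Dict, List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def cdn_networks(ip_ranges: Dict[str, Any], service: str = "CLOUDFRONT") -> List[Network]:
    """Collect IPv4 and IPv6 prefixes for one service from an ip-ranges.json-style dict."""
    networks: List[Network] = [
        ipaddress.ip_network(p["ip_prefix"])
        for p in ip_ranges.get("prefixes", []) if p.get("service") == service
    ]
    networks += [
        ipaddress.ip_network(p["ipv6_prefix"])
        for p in ip_ranges.get("ipv6_prefixes", []) if p.get("service") == service
    ]
    return networks


def is_from_cdn(peer_ip: str, networks: List[Network]) -> bool:
    """True if the connection's source address falls inside one of the CDN's ranges.

    Use the TCP peer address, not X-Forwarded-For, which clients can forge.
    """
    address = ipaddress.ip_address(peer_ip)
    return any(address in network for network in networks)  # v4/v6 mismatch is False


if __name__ == "__main__":
    sample = {  # documentation-only ranges standing in for the real file
        "prefixes": [
            {"ip_prefix": "203.0.113.0/24", "service": "CLOUDFRONT"},
            {"ip_prefix": "198.51.100.0/24", "service": "EC2"},
        ],
        "ipv6_prefixes": [{"ipv6_prefix": "2001:db8::/32", "service": "CLOUDFRONT"}],
    }
    nets = cdn_networks(sample)
    print(is_from_cdn("203.0.113.7", nets), is_from_cdn("198.51.100.7", nets))  # True False
```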

Compliance Alignment, Automation Ideas

- Compliance:

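- Configure geo-restrictions where regional regulations or licensing require limiting access by country; geo-blocking can support, but does not by itself satisfy, frameworks such as GDPR or HIPAA.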

- Use audit logs to track access and ensure compliance with regulations.

- Automation:

- Use Infrastructure as Code (IaC) tools like Terraform or AWS CDK to provision CDNs.

- Automate monitoring alerts for low cache hit ratios or high error rates.
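An automated alert on cache hit ratio or error rate is typically evaluated over fixed windows. It fires only after several bad windows in a row, so a single noisy minute does not page anyone. The sketch below shows that logic. The Window fields, the thresholds and the minimum-traffic guard are hypothetical defaults to tune against your own SLOs. In practice the same rule would usually live in a Prometheus alerting rule or a CloudWatch alarm rather than in custom code.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Window:
    requests: int    # requests served in this window (e.g. one minute)
    hits: int        # requests answered from the edge cache
    errors_5xx: int  # server-error responses returned to users


def window_is_bad(w: Window, min_hit_ratio: float, max_error_rate: float,
                  min_requests: int) -> bool:
    if w.requests < min_requests:
        return False  # too little traffic to judge; never alert on it
    return w.hits / w.requests < min_hit_ratio or w.errors_5xx / w.requests > max_error_rate


def should_alert(windows: Sequence[Window], min_hit_ratio: float = 0.8,
                 max_error_rate: float = 0.01, consecutive: int = 3,
                 min_requests: int = 100) -> bool:
    """Fire only if each of the most recent `consecutive` windows breaches a threshold."""
    if consecutive < 1:
        raise ValueError("consecutive must be at least 1")
    recent = windows[-consecutive:]
    if len(recent) < consecutive:
        return False
    return all(window_is_bad(w, min_hit_ratio, max_error_rate, min_requests) for w in recent)


if __name__ == "__main__":
    healthy = Window(requests=1000, hits=920, errors_5xx=2)
    low_hits = Window(requests=1000, hits=610, errors_5xx=3)
    print(should_alert([healthy, low_hits, low_hits]))            # False: only two bad windows
    print(should_alert([healthy, low_hits, low_hits, low_hits]))  # True
```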

Code Snippet: Terraform configuration for AWS CloudFront:

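```hcl
# Minimal CloudFront distribution in front of an S3 bucket (replace the bucket domain).
resource "aws_cloudfront_distribution" "cdn" {
  enabled = true

  origin {
    domain_name = "mybucket.s3.amazonaws.com"
    origin_id   = "S3Origin"
  }

  default_cache_behavior {
    target_origin_id       = "S3Origin"
    viewer_protocol_policy = "redirect-to-https"
    allowed_methods        = ["GET", "HEAD"]
    cached_methods         = ["GET", "HEAD"]

    # Legacy cache settings; required here because no cache_policy_id is set.
    forwarded_values {
      query_string = false
      cookies { forward = "none" }
    }

    min_ttl     = 0
    default_ttl = 86400    # 24 hours
    max_ttl     = 31536000 # 365 days
  }

  # Both blocks are required by the provider: no geo restriction, default certificate.
  restrictions {
    geo_restriction { restriction_type = "none" }
  }
  viewer_certificate { cloudfront_default_certificate = true }
}
```

Comparison with Alternatives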

How it Compares with Similar Tools or Approaches

CDNs are often compared to load balancers and reverse proxies, but they serve distinct purposes.

Table: CDN vs. Alternatives

| Feature | CDN | Load Balancer | Reverse Proxy |
|---------------------|--------------------------|--------------------------|-------------------------|
| Primary Use | Content caching | Traffic distribution | Request routing |
| Global Reach | Yes (multiple POPs) | Limited (regional) | Limited (single server) |
| Cache Support | Yes | No | Partial |
| Security Features | WAF, DDoS protection | Basic (e.g., SSL) | WAF, limited |

When to Choose a CDN

- Choose a CDN: For delivering static content (images, videos, CSS, JS) or accelerating dynamic content with caching. Ideal for global audiences and high-traffic scenarios.

- Choose a Load Balancer: For distributing dynamic API traffic or load across backend servers in a single region.

- Choose a Reverse Proxy: For fine-grained request routing, authentication, or rate limiting at the application layer.

Conclusion

Content Delivery Networks are a cornerstone of modern SRE practices, enabling scalable, reliable, and secure content delivery. By caching content at edge locations, CDNs reduce latency, handle traffic spikes, and enhance security, aligning with SRE goals of high availability and performance. As internet usage grows, CDNs are evolving with AI-driven optimizations, advanced security features, and support for edge computing.

Next Steps:

- Experiment with free tiers from providers like Cloudflare or AWS CloudFront.

- Integrate CDNs into your CI/CD pipelines for automated deployments.

- Monitor performance metrics to optimize cache efficiency and user experience.

Resources:

- Cloudflare Documentation: https://www.cloudflare.com/developer-platform

- AWS CloudFront Documentation: https://aws.amazon.com/cloudfront

- Akamai Documentation: https://www.akamai.com

- Fastly Community: https://www.fastly.com