Introduction & Overview

What is Canary Deployment?

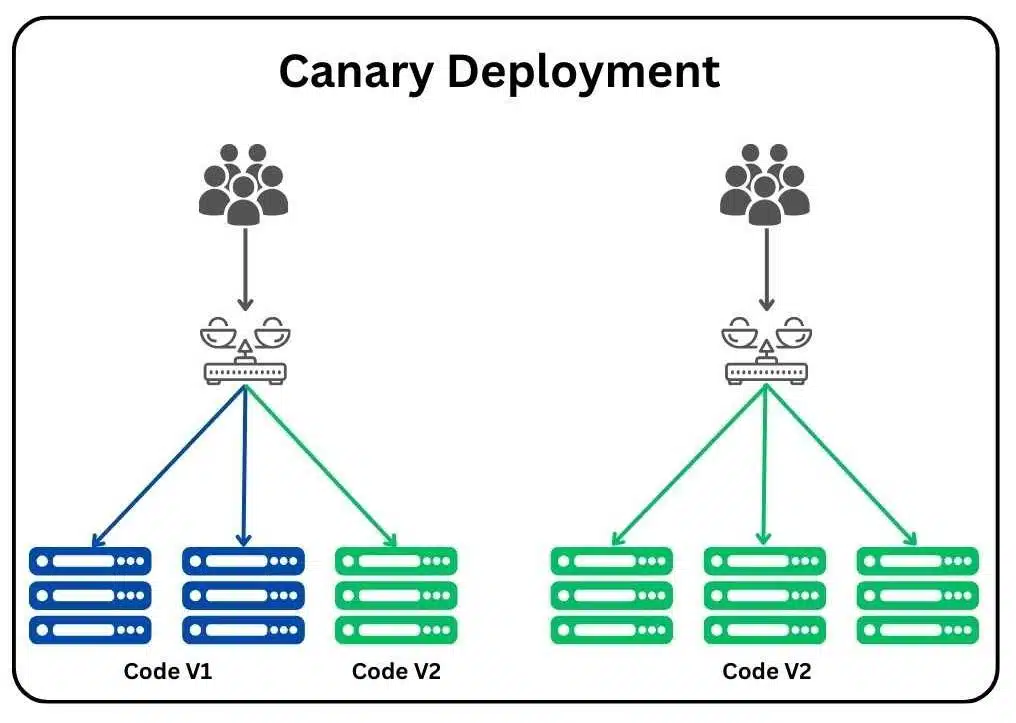

Canary deployment is a software release strategy in which a new version of an application is rolled out to a small subset of users or servers before a full deployment. Named after the “canary in a coal mine,” the approach exposes the new version to real production traffic while limiting how many users a defect can affect.

History or Background

The term “canary deployment” draws on the historical practice of miners carrying canaries to detect toxic gases. In software, the practice gained prominence with the rise of continuous delivery and DevOps, and companies like Google and Netflix popularized it as a way to maintain high availability during updates. Today, it is a cornerstone of Site Reliability Engineering (SRE) for managing large-scale, distributed systems.

- Term Origin: Inspired by miners who carried canaries to detect toxic gases.

- Software Adoption: Popularized by companies like Google, Netflix, and Facebook as part of progressive delivery strategies.

- Shift from Monoliths to Microservices: With CI/CD pipelines and cloud-native applications, canary deployments became a standard way to lower release risk.

Why is it Relevant in Site Reliability Engineering?

Canary deployments align with SRE’s focus on reliability and minimal downtime by:

- Reducing Risk: Testing changes on a small scale prevents widespread outages.

- Enhancing Observability: Monitoring a subset provides insights into performance and errors.

- Supporting Automation: Integrates with CI/CD pipelines for seamless updates.

- Improving User Experience: Gradual rollouts minimize disruptions.

Core Concepts & Terminology

Key Terms and Definitions

- Canary Release: A new application version deployed to a small group of users or servers.

- Baseline: The stable, existing version of the application in production.

- Traffic Splitting: Routing a percentage of user traffic to the canary release.

- Rollback: Reverting to the baseline if the canary fails.

- Metrics and Observability: Tools like Prometheus or Grafana to track performance, errors, and latency.

| Term | Definition |
|---|---|
| Canary Release | Gradual rollout of a new version to a small subset of users or servers before full deployment. |
| Traffic Splitting | Routing a percentage of user traffic to the new version. |
| Baseline | The stable version currently running in production. |
| Rollback | Reverting to the previous stable version if the canary fails. |
| SLO (Service Level Objective) | Reliability or performance target (e.g., error rate, latency) that the canary must meet before it is promoted. |
| Error Budget | Amount of unreliability the SLO permits over a period (1 - SLO); errors caused by a canary consume it. |
| Observability Metrics | Latency, error rate, and CPU/memory usage monitored during canary testing. |
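Simple arithmetic makes the error budget concrete. The budget equals (1 - SLO) multiplied by the number of requests in the window. The Python sketch below is illustrative only. It assumes a request-based availability SLO, and `error_budget` and `budget_remaining` are hypothetical helper names.

```python
def error_budget(slo: float, total_requests: int) -> float:
    """Number of failed requests the SLO tolerates over the measurement window."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be a fraction strictly between 0 and 1, e.g. 0.999")
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    return (1.0 - slo) * total_requests


def budget_remaining(slo: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of the error budget left: 0 means exhausted, negative means overspent."""
    budget = error_budget(slo, total_requests)
    return (budget - failed_requests) / budget


if __name__ == "__main__":
    # A 99.9% availability SLO over 1,000,000 requests allows about 1,000 failures.
    print(round(error_budget(0.999, 1_000_000)))              # 1000
    # If a bad canary caused 300 failures, roughly 70% of the budget remains.
    print(round(budget_remaining(0.999, 1_000_000, 300), 2))  # 0.7
```

Because the canary only receives a fraction of traffic, a faulty canary typically consumes a small share of the budget before it is rolled back. That is the main SRE argument for the strategy.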

How it Fits into the Site Reliability Engineering Lifecycle

Canary deployments support the SRE lifecycle in:

- Release Engineering: Ensures safe, gradual rollouts.

- Incident Response: Enables quick rollback if issues arise.

- Capacity Planning: Tests resource demands of new versions.

- Monitoring and Alerting: Validates changes via real-time metrics.

Architecture & How It Works

Components and Internal Workflow

A canary deployment involves:

- Application Instances: Baseline and canary versions running in parallel.

- Load Balancer: Distributes traffic between baseline and canary (e.g., 90% baseline, 10% canary).

- Monitoring Tools: Collect metrics like error rates, latency, and CPU usage.

- Automation Scripts: CI/CD pipelines that deploy, monitor, and roll back if needed.

- Workflow:

  - Deploy the canary to a small subset of servers.

  - Route a fraction of traffic to the canary.

  - Monitor metrics for anomalies.

  - If the metrics stay healthy, increase traffic to the canary; if not, roll back.
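The workflow above is a loop over increasing traffic stages, with a health gate at each step. The Python sketch below is minimal and assumes hypothetical callbacks. `set_canary_weight` would update the load balancer or service-mesh weights, and `canary_is_healthy` would query the monitoring system. Real pipelines also wait for a soak period at each stage before checking metrics; that wait is omitted here.

```python
from typing import Callable, Sequence


def progressive_rollout(
    stages: Sequence[int],
    set_canary_weight: Callable[[int], None],
    canary_is_healthy: Callable[[int], bool],
) -> bool:
    """Step canary traffic through `stages` (percentages), rolling back on the first failed check.

    Returns True if the canary passed every stage, False if it was rolled back.
    """
    for weight in stages:
        set_canary_weight(weight)          # e.g. update load-balancer or mesh weights
        if not canary_is_healthy(weight):  # e.g. compare canary metrics with the baseline
            set_canary_weight(0)           # rollback: send all traffic to the baseline
            return False
    return True


if __name__ == "__main__":
    applied: list[int] = []
    # Hypothetical health check that fails once the canary takes 50% of traffic.
    ok = progressive_rollout([10, 25, 50, 100], applied.append, lambda w: w < 50)
    print(ok, applied)  # False [10, 25, 50, 0]
```

Passing the side effects in as functions keeps the control flow independent of any particular load balancer or monitoring tool.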

Architecture Diagram

The architecture works as follows:

- Users send requests to a Load Balancer (e.g., NGINX, AWS ALB).

- The load balancer splits traffic: 90% to Baseline Servers (v1.0) and 10% to Canary Servers (v2.0).

- Both server groups connect to a Shared Database and Caching Layer (e.g., Redis).

- Monitoring Tools (e.g., Prometheus, Grafana) collect metrics from both server groups.

- A CI/CD Pipeline (e.g., Jenkins, GitLab) manages deployment and rollback.
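A load balancer that splits each request independently can send the same user to v1 on one request and to v2 on the next. When a consistent experience matters, the 90/10 split can be made per user instead, by hashing a stable identifier into 100 buckets. The Python sketch below is minimal and assumes the router has access to a stable user ID. `routes_to_canary` is a hypothetical helper, not an NGINX or AWS ALB feature.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Return True if this user should be served by the canary at the given percentage.

    The user ID is hashed into one of 100 buckets, so a user always lands in the same
    bucket, and raising canary_percent only adds users (nobody flips back to baseline).
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < canary_percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    in_canary = sum(routes_to_canary(u, 10) for u in users)
    # Expect roughly 10% of users; the exact count depends on the hash values.
    print(f"{in_canary} of {len(users)} users routed to the canary")
```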

Integration Points with CI/CD or Cloud Tools

- CI/CD: Tools like Jenkins, GitLab CI, or CircleCI automate canary deployments.

- Cloud Platforms: AWS (ALB, ECS), GCP (Cloud Run), or Kubernetes (via Istio or Linkerd) support traffic splitting.

- Observability: Prometheus, Grafana, or Datadog for monitoring; ELK Stack for logging.

Installation & Getting Started

Basic Setup or Prerequisites

- Infrastructure: Kubernetes cluster, AWS ECS, or similar platform.

- Tools: CI/CD pipeline (e.g., Jenkins), monitoring (e.g., Prometheus), load balancer (e.g., NGINX).

- Skills: Familiarity with containerization (Docker) and orchestration (Kubernetes).

- Environment: Access to a staging or production environment with monitoring setup.

Hands-on: Step-by-Step Beginner-Friendly Setup Guide

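This guide sets up a canary deployment on a local Kubernetes cluster, using Istio for traffic management. It assumes the minikube, istioctl, and kubectl CLIs are already installed. The images my-app:1.0 and my-app:2.0 are placeholders; replace them with your own.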

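- Start Kubernetes and install Istio:

```bash
# Start a local Kubernetes cluster with Minikube
minikube start

# Install Istio with the demo profile
istioctl install --set profile=demo -y

# Enable automatic Envoy sidecar injection for workloads in the default namespace
kubectl label namespace default istio-injection=enabled
```

- Deploy Baseline Application: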

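```yaml
# baseline-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-baseline
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
      version: v1
  template:
    metadata:
      labels:
        app: my-app
        version: v1
    spec:
      containers:
        - name: app
          image: my-app:1.0
          ports:
            - containerPort: 8080
---
# Service shared by both versions: it selects on "app" only,
# so Istio can route to either version subset
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  selector:
    app: my-app
  ports:
    - name: http
      port: 80
      targetPort: 8080
```

- Deploy Canary Application: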

```yaml
# canary-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-canary
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-app
      version: v2
  template:
    metadata:
      labels:
        app: my-app
        version: v2
    spec:
      containers:
        - name: app
          image: my-app:2.0
          ports:
            - containerPort: 8080
```

- Configure Istio for Traffic Splitting:

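```yaml
# virtual-service.yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: my-app
spec:
  hosts:
    - my-app
  http:
    - route:
        - destination:
            host: my-app
            subset: v1
          weight: 90
        - destination:
            host: my-app
            subset: v2
          weight: 10
---
# DestinationRule: maps the subsets referenced above to pod labels
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: my-app
spec:
  host: my-app
  subsets:
    - name: v1
      labels:
        version: v1
    - name: v2
      labels:
        version: v2
```

The DestinationRule maps the subset names v1 and v2 to the pods' version labels. Without it, the VirtualService subsets do not resolve. Istio applies the 90/10 weights regardless of replica counts (3 baseline pods vs. 1 canary pod).

- Apply Configurations: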

```bash
kubectl apply -f baseline-deployment.yaml
kubectl apply -f canary-deployment.yaml
kubectl apply -f virtual-service.yaml
```

- Monitor Metrics:

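  - Use Prometheus to track error rates and latency.

  - Example query for the per-second request rate to the canary. It assumes the application exports an http_requests_total counter with a version label: `rate(http_requests_total{version="v2"}[5m])`

  - Example query for the canary error rate, using Istio's standard request metric: `sum(rate(istio_requests_total{destination_app="my-app", destination_version="v2", response_code=~"5.."}[5m])) / sum(rate(istio_requests_total{destination_app="my-app", destination_version="v2"}[5m]))`

- If metrics indicate issues, roll back by setting the VirtualService weights to 100 for v1 and 0 for v2, then reapplying it.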
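Promotion and rollback both come down to rewriting the two weights in the VirtualService. The Python sketch below builds the same my-app VirtualService as a dictionary for any canary weight and prints it as JSON, which `kubectl apply -f -` accepts on standard input. The function name and the script name `vs_weights.py` are hypothetical.

```python
import json


def virtual_service(canary_weight: int, host: str = "my-app") -> dict:
    """Build the VirtualService with canary_weight% of traffic on subset v2, the rest on v1."""
    if not 0 <= canary_weight <= 100:
        raise ValueError("canary_weight must be between 0 and 100")
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "VirtualService",
        "metadata": {"name": host},
        "spec": {
            "hosts": [host],
            "http": [{
                "route": [
                    {"destination": {"host": host, "subset": "v1"}, "weight": 100 - canary_weight},
                    {"destination": {"host": host, "subset": "v2"}, "weight": canary_weight},
                ]
            }],
        },
    }


if __name__ == "__main__":
    # Rollback manifest: 100% of traffic back to v1.
    print(json.dumps(virtual_service(0), indent=2))
```

To use it, save the script as `vs_weights.py` and run `python vs_weights.py | kubectl apply -f -`. Deriving both weights from one number keeps their sum at 100, which is what Istio expects for a weighted route.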

Real-World Use Cases

Scenario 1: E-Commerce Platform

- Context: A retailer updates its checkout service to handle increased holiday traffic.

- Application: Deploy the new version to 5% of users, monitor cart abandonment rates, and scale up if stable.

- Outcome: Prevents outages during peak shopping periods.

Scenario 2: Streaming Service

- Context: A video platform introduces a new streaming codec.

- Application: Canary deployment to 10% of users in one region, monitoring buffer rates and quality metrics.

- Outcome: Validates codec performance before global rollout.

Scenario 3: Financial Services

- Context: A bank updates its transaction processing system.

- Application: Canary to 1% of transactions, with strict monitoring for errors or latency.

- Outcome: Ensures compliance and reliability for sensitive operations.

Scenario 4: SaaS Application

- Context: A SaaS provider rolls out a new dashboard feature.

- Application: Canary to a subset of enterprise users, tracking engagement metrics.

- Outcome: Identifies UI bugs before full release.

Benefits & Limitations

Key Advantages

- Risk Mitigation: Limits impact of failures to a small user base.

- Real-World Testing: Validates changes against real production traffic and conditions.

- Scalability: Gradually increases traffic to ensure system stability.

- Automation-Friendly: Seamlessly integrates with CI/CD pipelines.

Common Challenges or Limitations

- Complexity: Requires robust monitoring and traffic management tools.

- Resource Overhead: Running multiple versions increases infrastructure costs.

- Data Consistency: Database schema changes may complicate canary deployments.

- User Experience: Inconsistent behavior if canary and baseline differ significantly.

| Aspect | Advantage | Limitation |
|---|---|---|
| Risk | Minimizes outages | Requires rollback mechanisms |
| Testing | Real-world validation | Needs advanced monitoring |
| Scalability | Gradual rollout | Increased resource usage |
| User Experience | Seamless updates | Potential inconsistency |

Best Practices & Recommendations

Security Tips

- Restrict canary access to specific user groups or regions.

- Encrypt traffic between load balancers and canary instances.

- Monitor for security anomalies (e.g., unexpected API calls).

Performance

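- Keep the canary fleet small to limit resource usage, but give canary instances the same resources and configuration as the baseline so their metrics stay comparable.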

- Optimize monitoring queries for real-time insights.

- Set thresholds for automatic rollback (e.g., error rate > 1%).
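An absolute rollback rule such as "error rate > 1%" is often paired with two extra checks. A comparison against the baseline also catches a canary that is markedly worse than v1. A minimum sample size keeps a handful of early errors from triggering a rollback. The Python sketch below is minimal; the 2x ratio and the 500-request minimum are illustrative assumptions, not recommendations from the source.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    """Request and error counts for one version over the same observation window."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def should_roll_back(canary: WindowStats, baseline: WindowStats,
                     max_error_rate: float = 0.01,
                     max_ratio_to_baseline: float = 2.0,
                     min_requests: int = 500) -> bool:
    """Return True if the canary breaches the absolute or the relative error threshold."""
    if canary.requests < min_requests:
        return False  # not enough canary traffic yet to judge; keep observing
    if canary.error_rate > max_error_rate:
        return True   # absolute threshold, e.g. error rate > 1%
    if baseline.error_rate > 0 and canary.error_rate > max_ratio_to_baseline * baseline.error_rate:
        return True   # canary is markedly worse than the baseline
    return False


if __name__ == "__main__":
    baseline = WindowStats(requests=10_000, errors=20)  # 0.2% errors
    canary = WindowStats(requests=1_000, errors=5)      # 0.5% errors: under 1%, but 2.5x baseline
    print(should_roll_back(canary, baseline))           # True
```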

Maintenance

- Regularly update monitoring dashboards to reflect new metrics.

- Automate rollback scripts to reduce manual intervention.

- Document canary processes for team alignment.

Compliance Alignment

- Ensure canary deployments comply with regulations (e.g., GDPR for EU users).

- Log all canary actions for audit trails.

- Validate data privacy during canary testing.
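Audit trails are easiest to query when each canary action is recorded as one structured line. The Python sketch below writes a JSON-lines record. The field names and the `audit_record` helper are hypothetical. Where the lines are stored (an append-only file or a log pipeline) is left to your environment.

```python
import json
from datetime import datetime, timezone


def audit_record(actor: str, action: str, canary_weight: int, reason: str) -> str:
    """Format one canary action as a single JSON line for an append-only audit log."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # UTC, ISO 8601
        "actor": actor,                  # person or pipeline that made the change
        "action": action,                # e.g. "deploy", "promote", "rollback"
        "canary_weight": canary_weight,  # canary traffic percentage after the action
        "reason": reason,
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    print(audit_record("ci-pipeline", "rollback", 0, "canary error rate above 1%"))
```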

Automation Ideas

- Use tools like Argo Rollouts for Kubernetes-based canary automation.

- Integrate with observability platforms for automated alerts.

- Implement feature flags to toggle canary features dynamically.

Comparison with Alternatives

| Aspect | Canary Deployment | Blue-Green Deployment | A/B Testing |
|---|---|---|---|
| Definition | Gradual rollout to a subset of users/servers | Switch between two full environments | Test different versions for user behavior |
| Risk | Low (limited exposure) | Medium (full switch) | Low (user-focused) |
| Complexity | Moderate (traffic splitting, monitoring) | High (duplicate environments) | Moderate (user segmentation) |
| Use Case | Validate stability/performance | Zero-downtime releases | Optimize user experience |
| Rollback | Easy (adjust traffic) | Easy (switch back) | Complex (user-specific) |

When to Choose Canary Deployment

- Choose Canary When: You need to validate performance or stability in production with minimal risk.

- Choose Alternatives When:

  - Blue-Green: for zero-downtime requirements with simpler rollback.

  - A/B Testing: for experimenting with user-facing features.

Conclusion

Canary deployments are a powerful SRE strategy for safe, reliable software releases. By testing changes on a small scale, teams can ensure stability while embracing continuous delivery. Future trends include AI-driven canary analysis for predictive rollback and tighter integration with serverless architectures.

Next Steps

- Experiment with canary deployments in a staging environment.

- Explore tools like Istio, Argo Rollouts, or AWS CodeDeploy.

- Join SRE communities for best practices and case studies.

Resources

- Official Kubernetes Docs: https://kubernetes.io/docs/concepts/workloads/

- Istio Documentation: https://istio.io/latest/docs/

- Prometheus: https://prometheus.io/docs/

- SRE Community: https://sre.google/community/

This tutorial provides a detailed, actionable guide for implementing canary deployments in SRE, balancing theory, practical steps, and real-world insights.