Introduction & Overview

Rate limiting is a critical technique in Site Reliability Engineering (SRE) to ensure system stability, reliability, and security by controlling the rate of incoming requests to a service. This tutorial provides a detailed exploration of rate limiting, its implementation, and its significance in SRE practices.

What is Rate Limiting?

Rate limiting restricts the number of requests a user, client, or service can make to a system within a specific time frame. It prevents overuse, protects against abuse, and ensures equitable resource distribution.

- Purpose: Mitigates denial-of-service (DoS) attacks, maintains performance, and ensures fair usage.

- Applications: APIs, web servers, microservices, and cloud infrastructure.
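As a rough illustration of "a number of requests within a specific time frame", the sketch below counts requests per client in fixed time windows. The class name `FixedWindowLimiter`, its parameters, and the in-memory dictionary are assumptions made for this example; a production limiter would normally keep its counters in shared storage.

```python
import time


class FixedWindowLimiter:
    """Hypothetical example: allow at most `limit` requests per client per window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so behaviour can be checked without sleeping
        self.state = {}     # client_id -> (window index, requests counted in that window)

    def allow(self, client_id):
        window = int(self.clock() // self.window_seconds)
        seen_window, count = self.state.get(client_id, (window, 0))
        if seen_window != window:
            count = 0  # a new window started, so the counter resets
        if count >= self.limit:
            self.state[client_id] = (window, count)
            return False  # over the limit for the current window
        self.state[client_id] = (window, count + 1)
        return True


limiter = FixedWindowLimiter(limit=3, window_seconds=60)
results = [limiter.allow("client-a") for _ in range(4)]
```

With a limit of 3, the fourth call inside the same window is refused. Fixed windows are simple but let a client send up to twice the limit across a window boundary, which is one reason sliding windows and token buckets exist.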

History or Background

Rate limiting for web services became common in the early 2000s, driven by the need to protect APIs and web applications from abuse, although the underlying algorithms such as the leaky bucket come from earlier work on network traffic shaping. Early web implementations were simple, such as per-IP request caps; modern systems use algorithms like token buckets and sliding windows, built into cloud platforms and API gateways.

Why is it Relevant in Site Reliability Engineering?

In SRE, rate limiting aligns with the principles of reliability, scalability, and availability:

- Prevents Overload: Protects services from traffic spikes, ensuring uptime.

- Resource Management: Optimizes resource allocation for predictable performance.

- Security: Mitigates malicious activities like DDoS attacks.

- User Experience: Ensures fair access, reducing latency for legitimate users.

Core Concepts & Terminology

Key Terms and Definitions

| Term | Definition |
|---|---|
| Rate Limit | Maximum number of requests allowed in a given time period. |
| Token Bucket | Algorithm where tokens are added at a fixed rate; requests consume tokens. |
| Leaky Bucket | Algorithm that smooths out traffic by processing requests at a fixed rate. |
| Sliding Window | Tracks requests over a moving time window for precise limiting. |
| Throttling | Slowing down or queuing requests when limits are exceeded. |
| Quota | Total allowed requests per user or client over a period (e.g., daily). |
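The token bucket entry can be made concrete with a short sketch. The class name `TokenBucket` and the parameters `rate`, `capacity`, and `cost` are illustrative choices for this example rather than part of any particular library.

```python
import time


class TokenBucket:
    """Hypothetical example of a token bucket for a single client."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate               # tokens added per second
        self.capacity = capacity       # maximum tokens held, i.e. the burst size
        self.clock = clock             # injectable so behaviour can be checked without sleeping
        self.tokens = float(capacity)  # the bucket starts full
        self.updated = clock()

    def allow(self, cost=1):
        now = self.clock()
        # Refill for the elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost  # the request consumes tokens
            return True
        return False             # not enough tokens: reject or throttle


bucket = TokenBucket(rate=2, capacity=5)
burst = [bucket.allow() for _ in range(5)]  # a full bucket absorbs a burst of 5
```

The capacity sets how large a burst is tolerated, while the rate sets the sustained throughput, so the two can be tuned separately.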

How It Fits into the SRE Lifecycle

Rate limiting is integral to multiple SRE lifecycle stages:

- Design: Architect systems with rate limiting to handle traffic surges.

- Implementation: Integrate with API gateways or load balancers.

- Monitoring: Track request rates and limit triggers for observability.

- Incident Response: Mitigate outages caused by excessive traffic.

- Postmortems: Analyze rate-limiting failures to improve configurations.

Architecture & How It Works

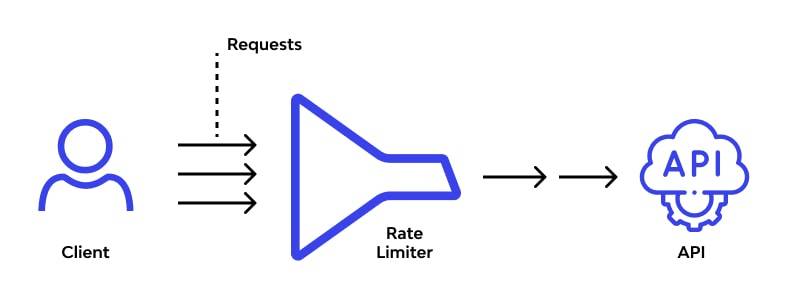

Components

- Client: Sends requests (e.g., users, bots, or services).

- Rate Limiter: Middleware or service enforcing limits (e.g., API gateway, Nginx).

- Backend Service: Processes requests if allowed by the rate limiter.

- Storage Layer: Tracks request counts (e.g., Redis for distributed systems).

- Monitoring System: Logs metrics for analysis (e.g., Prometheus, Grafana).

Internal Workflow

- Request Arrival: Client sends a request to the service endpoint.

- Rate Limit Check: Rate limiter evaluates request against defined policies (e.g., tokens available).

- Decision: Allow request, throttle, or reject with HTTP 429 (Too Many Requests).

- Storage Update: Update request counts in storage (e.g., Redis counter).

- Monitoring: Log metrics for observability and alerting.
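The workflow above can be sketched in a single function. This example assumes a sliding-window log kept in process memory as a stand-in for the storage layer and a plain dictionary as a stand-in for the monitoring system; the class name, the `handle` method, and the use of 200 to mean "forwarded to the backend" are all hypothetical.

```python
import math
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Hypothetical example: at most `limit` requests per client in any window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self.hits = defaultdict(deque)                # stand-in for the storage layer
        self.metrics = {"allowed": 0, "rejected": 0}  # stand-in for monitoring

    def handle(self, client_id):
        """Return (status_code, headers) for one incoming request."""
        now = self.clock()
        log = self.hits[client_id]
        # Rate limit check: discard timestamps that have left the window.
        while log and now - log[0] >= self.window_seconds:
            log.popleft()
        if len(log) >= self.limit:
            # Decision: reject, and say when the oldest request leaves the window.
            retry_after = self.window_seconds - (now - log[0])
            self.metrics["rejected"] += 1
            return 429, {"Retry-After": str(max(1, math.ceil(retry_after)))}
        log.append(now)               # storage update
        self.metrics["allowed"] += 1  # monitoring
        return 200, {}                # 200 stands in for forwarding to the backend


limiter = SlidingWindowLimiter(limit=2, window_seconds=10)
responses = [limiter.handle("client-a") for _ in range(3)]
```

Storing one timestamp per request is precise but uses memory in proportion to the limit, so high limits are often served by approximate sliding-window counters instead.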

Architecture Diagram Description

The architecture involves a layered approach:

- Client Layer: Users or services sending HTTP requests.

- Rate Limiter Layer: API gateway (e.g., AWS API Gateway, Kong) or load balancer (e.g., Nginx) with rate-limiting logic.

- Application Layer: Backend services (e.g., microservices on Kubernetes).

- Storage Layer: In-memory database like Redis for tracking request counts.

- Monitoring Layer: Prometheus for metrics, Grafana for visualization.

Diagram Layout:

    [Clients] --> [API Gateway/Load Balancer (Rate Limiter)] --> [Backend Services]
                        |                       |
                     [Redis]          [Prometheus/Grafana]

Integration Points with CI/CD or Cloud Tools

- CI/CD: Automate rate limit policy updates via infrastructure-as-code (e.g., Terraform for AWS API Gateway).

- Cloud Tools: Use AWS API Gateway, Google Cloud Armor, or Azure API Management for built-in rate limiting.

- Observability: Integrate with Prometheus for metrics and Grafana for dashboards.

Installation & Getting Started

Basic Setup or Prerequisites

- Environment: Linux server, Docker, or cloud platform (e.g., AWS, GCP).

- Tools: Nginx, Redis, or cloud-native rate-limiting service.

- Dependencies: Basic knowledge of HTTP, REST APIs, and command-line tools.

- Software:

  - Nginx (for reverse proxy and rate limiting).

  - Redis (for distributed counting).

  - Docker (optional for containerized setup).

Hands-on: Step-by-Step Beginner-Friendly Setup Guide

This guide sets up rate limiting using Nginx and Redis on a Linux server.

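1. Install Nginx:

    sudo apt update
    sudo apt install nginx

2. Install Redis:

    sudo apt install redis-server

3. Configure Nginx for Rate Limiting:

Edit /etc/nginx/nginx.conf and add the rate-limiting directives to the http block:

    http {
        limit_req_zone $binary_remote_addr zone=mylimit:10m rate=10r/s;
        # Nginx rejects with 503 by default; return 429 instead.
        limit_req_status 429;

        server {
            location /api/ {
                limit_req zone=mylimit burst=20;
                proxy_pass http://backend;
            }
        }
    }

- limit_req_zone: Defines a shared memory zone (here 10 MB, keyed by client IP address) for tracking requests.

- rate=10r/s: Limits each client to 10 requests per second.

- burst=20: Lets up to 20 requests above the rate wait in a queue, where they are released at the configured rate; anything beyond that is rejected.

- limit_req_status 429: Sets the status code for rejected requests. Without it, Nginx returns 503.

- proxy_pass http://backend: Assumes an upstream named backend is defined elsewhere in the configuration.

4. Restart Nginx:

    sudo nginx -t
    sudo systemctl restart nginx

5. Test Rate Limiting:

Use curl to send many requests at once and print only the status codes:

    seq 1 50 | xargs -P 50 -I{} curl -s -o /dev/null -w "%{http_code}\n" http://localhost/api/

Expect HTTP 429 responses once the rate and burst allowance are exceeded. The requests must arrive concurrently: because queued requests are delayed rather than rejected, a sequential loop that waits for each response is slowed to the configured rate and may never see a 429. Note the trailing slash in the URL, which matches the /api/ location.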
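To reason about how rate=10r/s and burst=20 interact, the following sketch models the limiter as a leaky bucket. It is a simplified model written for this tutorial, not Nginx's actual implementation; the function name `simulate_limit_req` and the three outcome labels are assumptions.

```python
def simulate_limit_req(arrivals_ms, rate, burst):
    """Classify each request as 'pass', 'delay', or 'reject' (simplified model).

    arrivals_ms: non-decreasing arrival times in milliseconds.
    rate: permitted requests per second.
    burst: how many requests above the rate may wait in the queue.
    """
    outcomes = []
    excess = 0.0    # how many requests the client is ahead of the permitted rate
    last_ms = None  # arrival time of the last accepted request
    for t in arrivals_ms:
        if last_ms is None:
            new_excess = 0.0  # the first request is always within the rate
        else:
            drained = rate * (t - last_ms) / 1000  # allowance regained since then
            new_excess = max(excess - drained + 1, 0.0)
        if new_excess > burst:
            outcomes.append("reject")  # queue is full; state is left unchanged
            continue
        excess, last_ms = new_excess, t
        outcomes.append("delay" if excess > 0 else "pass")
    return outcomes


simultaneous = simulate_limit_req([0] * 50, rate=10, burst=20)
paced = simulate_limit_req([i * 100 for i in range(30)], rate=10, burst=20)
```

In this model, 50 simultaneous requests split into 1 passed, 20 delayed, and 29 rejected, while 30 requests spaced 100 ms apart all pass. That is why a test needs concurrent requests to trigger rejections.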

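6. Integrate Redis for Distributed Rate Limiting (optional):

The built-in limit_req counters live in the shared memory of a single Nginx instance, so several instances behind a load balancer each enforce the limit independently. A shared limit needs a common store such as Redis. One starting point is OpenResty's lua-resty-limit-traffic library (module resty.limit.req), which moves the limiting logic into Lua. Note that the example below still keeps its counters in a lua_shared_dict, local to one instance; it does not talk to Redis, and backing it with Redis requires additional code that is not shown here. Install the Lua module and configure:

    http {
        lua_shared_dict my_limit_store 10m;

        server {
            location /api/ {
                access_by_lua_block {
                    local limit_req = require "resty.limit.req"

                    -- 10 requests/second steady rate, up to 20 more as a burst
                    local lim, err = limit_req.new("my_limit_store", 10, 20)
                    if not lim then
                        ngx.log(ngx.ERR, "failed to create limiter: ", err)
                        return ngx.exit(500)
                    end

                    -- Key by client address; true commits this request to the counter
                    local key = ngx.var.binary_remote_addr
                    local delay, err = lim:incoming(key, true)
                    if not delay then
                        if err == "rejected" then
                            return ngx.exit(429)
                        end
                        ngx.log(ngx.ERR, "failed to limit request: ", err)
                        return ngx.exit(500)
                    end

                    if delay > 0 then
                        ngx.sleep(delay)
                    end
                }

                proxy_pass http://backend;
            }
        }
    }

7. Monitor with Prometheus:

Install Prometheus and configure Nginx to expose metrics that Prometheus can scrape.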

Real-World Use Cases

Scenario 1: API Protection in E-Commerce

- Context: An e-commerce platform experiences traffic spikes during sales.

- Application: Rate limiting on API endpoints (e.g., /checkout) to prevent server overload.

- Implementation: AWS API Gateway with a 100 requests/second limit per user.

- Outcome: Stable checkout process during high traffic.

Scenario 2: Mitigating DDoS in Financial Services

- Context: A banking app faces DDoS attacks targeting login APIs.

- Application: Use Cloudflare’s rate limiting to block excessive requests from a single IP.

- Outcome: Reduced impact of the attack on the login APIs, maintaining service availability.

Scenario 3: Fair Resource Allocation in SaaS

- Context: A SaaS platform needs to ensure fair API usage among customers.

- Application: Token bucket rate limiting in Kong API Gateway, with per-customer quotas.

- Outcome: Equitable resource distribution, preventing overuse by large clients.
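A per-customer quota of the kind described in this scenario might be tracked as in the sketch below. It is not Kong's implementation; the class name `DailyQuota`, the customer identifiers, and the quota values are made up for illustration.

```python
import datetime


class DailyQuota:
    """Hypothetical example: cap each customer's requests per calendar day."""

    def __init__(self, quotas, default_quota, today=datetime.date.today):
        self.quotas = quotas                # customer_id -> requests allowed per day
        self.default_quota = default_quota  # applied to customers not listed in quotas
        self.today = today                  # injectable so the date can be controlled
        self.used = {}                      # customer_id -> (date, requests used that date)

    def consume(self, customer_id):
        """Count one request; return False once the daily quota is spent."""
        day = self.today()
        last_day, used = self.used.get(customer_id, (day, 0))
        if last_day != day:
            used = 0  # a new day started, so the quota resets
        if used >= self.quotas.get(customer_id, self.default_quota):
            self.used[customer_id] = (day, used)
            return False
        self.used[customer_id] = (day, used + 1)
        return True


# Illustrative values only: a large customer gets a higher daily allowance.
quota = DailyQuota({"large-customer": 10_000, "small-customer": 1_000}, default_quota=100)
first_request_allowed = quota.consume("small-customer")
```

A quota like this caps total usage over a long period, while a token bucket shapes short-term bursts, so the two are usually combined.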

Scenario 4: Microservices in Media Streaming

- Context: A streaming service uses microservices for content delivery.

- Application: Rate limiting at the ingress gateway (e.g., Istio) to protect backend services.

- Outcome: Consistent streaming quality during peak usage.

Benefits & Limitations

Key Advantages

- Scalability: Handles traffic spikes without overloading servers.

- Security: Mitigates DoS and brute-force attacks.

- Fairness: Ensures equitable resource access for users.

- Cost Efficiency: Reduces infrastructure costs by preventing over-provisioning.

Common Challenges or Limitations

- False Positives: Legitimate users may be blocked during bursts.

- Complexity: Distributed systems require synchronized rate limiting (e.g., Redis).

- Latency: Throttling can introduce delays for valid requests.

- Configuration Errors: Incorrect limits can degrade user experience.

Best Practices & Recommendations

Security Tips

- Use dynamic rate limits based on user roles (e.g., higher limits for premium users).

- Combine with WAF (Web Application Firewall) for layered security.

- Log all 429 responses for audit and analysis.

Performance

- Use in-memory storage like Redis for low-latency rate tracking.

- Optimize burst settings to balance user experience and system protection.

- Monitor rate-limiting metrics with tools like Prometheus.

Maintenance

- Regularly review rate limit policies to align with traffic patterns.

- Automate policy updates via CI/CD pipelines (e.g., Terraform).

- Test rate limits in staging environments before production.

Compliance Alignment

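- Log rate-limiting actions securely to support audit requirements under frameworks such as GDPR, HIPAA, or PCI-DSS, keeping in mind that logged client identifiers such as IP addresses may themselves count as personal data.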

- Ensure transparency with users about rate-limiting policies.

Automation Ideas

- Use Infrastructure-as-Code to manage rate-limiting configurations.

- Implement auto-scaling rate limits based on real-time traffic analysis.
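One possible shape for limits that adjust to live conditions is additive increase, multiplicative decrease: raise the limit slowly while the backend is healthy and cut it sharply when errors rise. The sketch below assumes the backend error rate is the health signal; the function name `adjust_limit`, its thresholds, and its step sizes are illustrative and would need tuning against real traffic.

```python
def adjust_limit(current_limit, error_rate, *, target_error_rate=0.01,
                 increase_step=5, decrease_factor=0.5,
                 min_limit=10, max_limit=1000):
    """Return the next requests-per-second limit (hypothetical policy).

    error_rate is the fraction of backend responses that failed during the
    last measurement interval, e.g. 0.02 for 2%.
    """
    if error_rate > target_error_rate:
        proposed = current_limit * decrease_factor  # back off quickly under stress
    else:
        proposed = current_limit + increase_step    # probe for spare capacity slowly
    # Clamp so the limit never collapses to zero or grows without bound.
    return int(max(min_limit, min(max_limit, proposed)))


limit = 100
for observed_error_rate in (0.0, 0.0, 0.05, 0.0):
    limit = adjust_limit(limit, observed_error_rate)
```

Running such a function on a timer and writing the result into the limiter's configuration is one way to tie it to the Infrastructure-as-Code approach above, though the control loop should be tested in staging first.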

Comparison with Alternatives

| Feature | Rate Limiting (Token Bucket) | Circuit Breaker | Request Queuing |
|---|---|---|---|
| Purpose | Limits request rate | Halts requests on failure | Queues excess requests |
| Use Case | API protection, fairness | Fault isolation | Smooth traffic spikes |
| Complexity | Moderate | High | Low |
| Latency Impact | Low (if tuned) | High (on failure) | Moderate |
| Scalability | High (with Redis) | Moderate | High |

When to Choose Rate Limiting

- Use for APIs or services with predictable traffic patterns.

- Ideal for preventing abuse or ensuring fairness.

- Choose alternatives like circuit breakers for fault tolerance or queuing for bursty workloads.

Conclusion

Rate limiting is a cornerstone of SRE for maintaining system reliability, security, and fairness. By implementing robust rate-limiting strategies, organizations can protect their services from abuse, optimize resource usage, and enhance user experience. As systems scale and traffic patterns evolve, approaches such as adaptive rate limiting and AI-driven policies are likely to play a growing role.

Next Steps

- Experiment with rate limiting in a sandbox environment.

- Explore cloud-native tools like AWS API Gateway or Kong.

- Monitor and tune rate limits based on real-world traffic.

Resources

- Official Docs: Nginx Rate Limiting, AWS API Gateway

- Communities: SRE forums on Reddit, CNCF Slack, or X (@SREcon)