Introduction & Overview

What is the Circuit Breaker Pattern?

The Circuit Breaker pattern is a design pattern used in software engineering to enhance the resilience and fault tolerance of distributed systems, particularly in microservices architectures. Inspired by electrical circuit breakers, which prevent circuit overload by interrupting current flow, the software Circuit Breaker pattern monitors service interactions and halts requests to a failing service to prevent cascading failures. It acts as a safety mechanism, ensuring system stability by isolating faults and allowing services time to recover.

History or Background

The Circuit Breaker pattern was popularized by Michael Nygard in his book Release It! (2007), where he introduced it as a strategy to prevent catastrophic cascades in distributed systems. The concept draws from electrical engineering, where circuit breakers protect circuits from overloads or short circuits. Netflix further advanced its adoption by developing the open-source Hystrix library in 2012, which implemented the Circuit Breaker pattern for their microservices architecture. Since then, libraries like Resilience4j, Polly, and service meshes like Istio have expanded its application in modern Site Reliability Engineering (SRE) practices.

Why is it Relevant in Site Reliability Engineering?

In SRE, maintaining system reliability and minimizing downtime are paramount. Distributed systems, common in cloud-native and microservices architectures, are prone to failures due to network latency, service unavailability, or resource exhaustion. The Circuit Breaker pattern is critical for:

- Preventing Cascading Failures: Stops a single service failure from propagating across the system.

- Reducing Mean Time to Recovery (MTTR): Allows failing services to recover without being overwhelmed by repeated requests.

- Enhancing User Experience: Provides immediate feedback (e.g., fallback responses) instead of prolonged timeouts.

- Optimizing Resource Usage: Reduces futile operations on failing services, preserving system resources.

By integrating Circuit Breakers into SRE workflows, teams can better support high availability targets (e.g., 99.999% uptime goals) and improve system resilience, in line with SRE principles of automation and reliability.

Core Concepts & Terminology

Key Terms and Definitions

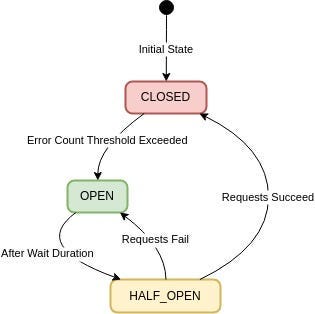

- Circuit Breaker: A software component that monitors service calls and “trips” to prevent further requests to a failing service.

- Closed State: The default state where requests pass through to the service, and failures are monitored.

- Open State: The state where the Circuit Breaker blocks requests to a failing service, returning errors or fallbacks immediately.

- Half-Open State: A transitional state where limited test requests are allowed to check if the service has recovered.

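- Failure Threshold: The number of consecutive failures (or, in rate-based implementations such as Resilience4j, the percentage of failed calls within a sliding window) that triggers the Circuit Breaker to move to the Open state.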

- Timeout Duration: The time the Circuit Breaker remains in the Open state before transitioning to Half-Open.

- Fallback Mechanism: Alternative responses (e.g., cached data or default values) provided when the Circuit Breaker is Open.

- Service Mesh: A layer (e.g., Istio) that manages service-to-service communication, often implementing Circuit Breakers.

| Term | Definition | Relevance in SRE |
|---|---|---|
| Closed State | All requests flow normally | Healthy system |
| Open State | Stops all requests to failing service | Prevents cascading failures |
| Half-Open State | Allows limited requests to test recovery | Controlled recovery |
| Fail-Fast | Immediate error response when breaker is open | Reduces latency |
| Fallback | Alternative response when service fails | Graceful degradation |
| SLI (Service Level Indicator) | Measure of reliability | Circuit breaker metrics |
| Error Budget | Allowed failure rate within SLO | Breakers enforce error budgets |
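The terms above map onto a few small types. The following is only a sketch: the names `BreakerState` and `BreakerSettings` and their fields are hypothetical and do not come from any particular library.

```java
import java.time.Duration;

/** The three states defined above. */
enum BreakerState { CLOSED, OPEN, HALF_OPEN }

/** Hypothetical settings holder; the field names are illustrative only. */
final class BreakerSettings {
    private final int failureThreshold;   // failures that trip the breaker
    private final Duration openTimeout;   // time spent Open before Half-Open
    private final int halfOpenTrialCalls; // test requests allowed in Half-Open

    BreakerSettings(int failureThreshold, Duration openTimeout, int halfOpenTrialCalls) {
        if (failureThreshold < 1) {
            throw new IllegalArgumentException("failureThreshold must be >= 1");
        }
        if (openTimeout.isZero() || openTimeout.isNegative()) {
            throw new IllegalArgumentException("openTimeout must be positive");
        }
        if (halfOpenTrialCalls < 1) {
            throw new IllegalArgumentException("halfOpenTrialCalls must be >= 1");
        }
        this.failureThreshold = failureThreshold;
        this.openTimeout = openTimeout;
        this.halfOpenTrialCalls = halfOpenTrialCalls;
    }

    int failureThreshold() { return failureThreshold; }
    Duration openTimeout() { return openTimeout; }
    int halfOpenTrialCalls() { return halfOpenTrialCalls; }
}
```

Validating the values at construction time means a misconfigured breaker (for example, a zero timeout) is rejected at startup rather than discovered during an outage.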

How It Fits into the Site Reliability Engineering Lifecycle

In the SRE lifecycle, the Circuit Breaker pattern aligns with several key phases:

- Design and Development: Incorporate Circuit Breakers into microservices or API gateways to ensure fault tolerance.

- Monitoring and Observability: Track Circuit Breaker states (Closed, Open, Half-Open) and failure rates to detect issues early.

- Incident Response: Use Circuit Breakers to isolate faults, reducing the blast radius during outages.

- Post-Incident Analysis: Analyze Circuit Breaker logs to understand failure patterns and improve configurations.

- Automation: Automate state transitions and fallback responses to minimize manual intervention.

Architecture & How It Works

Components

The Circuit Breaker pattern consists of the following components:

- Circuit Breaker Object: Wraps calls to a remote service, monitoring success/failure rates.

- State Machine: Manages transitions between Closed, Open, and Half-Open states based on failure thresholds and timeouts.

- Monitoring System: Tracks metrics like error rates, response times, and timeouts to inform state changes.

- Fallback Logic: Provides alternative responses when the Circuit Breaker is Open.

- Configuration Parameters: Includes failure threshold, timeout duration, and health check intervals.

Internal Workflow

- Closed State: All requests pass through to the service. The Circuit Breaker monitors failures (e.g., timeouts, exceptions).

- Failure Detection: If failures exceed the threshold (e.g., 5 consecutive failures), the Circuit Breaker trips to the Open state.

- Open State: Requests are blocked, and the Circuit Breaker returns errors or fallbacks. A timeout timer starts (e.g., 30 seconds).

- Half-Open State: After the timeout, limited test requests are sent. If successful, the Circuit Breaker resets to Closed; if not, it returns to Open.

- Monitoring and Alerts: Logs state changes and metrics for observability, triggering alerts if the Circuit Breaker trips.
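The workflow above can be sketched as a small state machine. This is a minimal illustration under stated assumptions, not how any specific library implements it: the class name `SimpleCircuitBreaker` is hypothetical, the breaker trips once the consecutive-failure count reaches the threshold, it is not thread-safe, and the Half-Open state admits calls until one of them completes instead of capping the number of trial requests. The clock is injected so the timeout can be exercised in tests without sleeping.

```java
import java.util.function.LongSupplier;
import java.util.function.Supplier;

/** Minimal, single-threaded circuit breaker sketch (illustrative only). */
class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold; // consecutive failures that trip the breaker
    private final long openMillis;      // time to stay Open before probing again
    private final LongSupplier clock;   // time source in milliseconds (injectable)

    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAt = 0L;

    SimpleCircuitBreaker(int failureThreshold, long openMillis, LongSupplier clock) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
        this.clock = clock;
    }

    /** Current state; Open lazily becomes Half-Open once the timeout has elapsed. */
    State state() {
        if (state == State.OPEN && clock.getAsLong() - openedAt >= openMillis) {
            state = State.HALF_OPEN;
        }
        return state;
    }

    /** Runs the action unless the breaker is Open; uses the fallback on rejection or failure. */
    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (state() == State.OPEN) {
            return fallback.get(); // fail fast without touching the service
        }
        try {
            T result = action.get();
            consecutiveFailures = 0;
            state = State.CLOSED;  // a success closes (or keeps closed) the breaker
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            // A failed trial call, or reaching the threshold, (re)opens the breaker.
            if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
                state = State.OPEN;
                openedAt = clock.getAsLong();
            }
            return fallback.get();
        }
    }
}
```

A caller would wrap each remote call, for example `breaker.call(() -> client.fetch(id), () -> cachedValue)`, where `client` and `cachedValue` stand in for whatever the application provides.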

Architecture Diagram Description

The architecture diagram illustrates the Circuit Breaker’s role in a microservices environment:

- API Gateway: Receives client requests and routes them to microservices.

- Circuit Breaker: Positioned between the API Gateway and a microservice (e.g., Payment Service). It monitors requests and manages state transitions.

- Service Discovery: Identifies available service instances.

- Fallback Mechanism: Provides cached responses or alternative workflows when the Circuit Breaker is Open.

- Monitoring System: Collects metrics (e.g., error rates) and sends alerts via tools like Prometheus or Azure Monitor.

- Microservices: Independent services (e.g., User Auth, Account) interacting via the Circuit Breaker.

```
[ Client Requests ]
         │
         ▼
┌─────────────────┐
│ Circuit Breaker │
│ - State Mgmt    │
│ - Thresholds    │
│ - Metrics       │
└─────────────────┘
         │
         ▼
 ┌───────────────┐
 │   Service B   │
 │ (Dependency)  │
 └───────────────┘
```

State transitions (Mermaid state diagram):

```mermaid
stateDiagram-v2
    [*] --> Closed: Initial State
    Closed --> Open: Failure Threshold Exceeded
    Open --> HalfOpen: Timeout Expires
    HalfOpen --> Closed: Success Threshold Exceeded
    HalfOpen --> Open: Failure Occurs
```

Integration Points with CI/CD or Cloud Tools

- CI/CD Pipelines: Integrate Circuit Breaker libraries (e.g., Resilience4j) into application code during the build phase. Use automated tests to verify Circuit Breaker behavior.

- Cloud Tools:
  - Service Meshes (Istio): Implement Circuit Breakers at the network level for traffic management.
  - Azure Monitor: Collects telemetry data for Circuit Breaker state monitoring.
  - AWS App Mesh: Supports circuit-breaking behavior for microservices through connection pool limits and outlier detection.
  - Prometheus/Grafana: Visualize Circuit Breaker metrics for real-time observability.
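To make the Prometheus integration more concrete, the sketch below renders a breaker's state in the Prometheus text exposition format, one gauge sample per state. The metric name `demo_circuit_breaker_state` and the class `BreakerMetrics` are assumptions made for illustration; libraries such as Resilience4j publish their own metric names through Micrometer, so this is not what those libraries emit.

```java
import java.util.Locale;

/** Renders circuit breaker state as Prometheus text-format gauge samples (sketch). */
class BreakerMetrics {
    enum State { CLOSED, OPEN, HALF_OPEN }

    /** One line per state: the current state has value 1, all others 0. */
    static String render(String breakerName, State current) {
        StringBuilder out = new StringBuilder();
        out.append("# TYPE demo_circuit_breaker_state gauge\n");
        for (State s : State.values()) {
            out.append("demo_circuit_breaker_state{name=\"")
               .append(escape(breakerName))
               .append("\",state=\"")
               .append(s.name().toLowerCase(Locale.ROOT))
               .append("\"} ")
               .append(s == current ? 1 : 0)
               .append('\n');
        }
        return out.toString();
    }

    /** Escapes backslash, double quote, and newline in a label value. */
    private static String escape(String value) {
        return value.replace("\\", "\\\\")
                    .replace("\"", "\\\"")
                    .replace("\n", "\\n");
    }
}
```

Exposing one sample per state makes it straightforward to alert on a breaker being Open, for example with a rule that matches `state="open"` samples equal to 1.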

Installation & Getting Started

Basic Setup or Prerequisites

- Programming Language: Java, Python, or JavaScript (examples use Java with Resilience4j).

- Dependencies: Install a Circuit Breaker library (e.g., Resilience4j, Hystrix, or Polly).

- Monitoring Tools: Set up Prometheus, Grafana, or Azure Monitor for observability.

- Environment: A microservices-based application running on a cloud platform (e.g., AWS, Azure, Kubernetes).

- Development Tools: Maven/Gradle (for Java), pip (for Python), or npm (for JavaScript).

Hands-On: Step-by-Step Beginner-Friendly Setup Guide

This guide uses Resilience4j in a Java Spring Boot application.

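1. Add Dependencies:

Add Resilience4j to your pom.xml (Maven):

```xml
<dependency>
    <groupId>io.github.resilience4j</groupId>
    <artifactId>resilience4j-spring-boot2</artifactId>
    <version>2.2.0</version>
</dependency>
```

This artifact targets Spring Boot 2; for Spring Boot 3, use resilience4j-spring-boot3 instead. The @CircuitBreaker annotation is applied through Spring AOP, so spring-boot-starter-aop must also be on the classpath.

2. Configure Circuit Breaker:

In application.yml, define Circuit Breaker settings: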

```yaml
resilience4j.circuitbreaker:
  instances:
    paymentService:
      slidingWindowSize: 10                     # evaluate the last 10 calls
      failureRateThreshold: 50                  # open at a 50% failure rate
      waitDurationInOpenState: 30s              # stay Open for 30 seconds
      permittedNumberOfCallsInHalfOpenState: 3  # trial calls in Half-Open
```

3. Implement Circuit Breaker in Code:

Wrap a service call with the Circuit Breaker:

```java
import io.github.resilience4j.circuitbreaker.annotation.CircuitBreaker;
import org.springframework.stereotype.Service;

@Service
public class PaymentService {

    // "paymentService" must match the instance name configured in application.yml
    @CircuitBreaker(name = "paymentService", fallbackMethod = "fallback")
    public String processPayment(String orderId) {
        // Simulate an external service call that fails about half the time
        if (Math.random() > 0.5) {
            throw new RuntimeException("Payment service unavailable");
        }
        return "Payment processed for order: " + orderId;
    }

    // Fallback: same parameters as the guarded method, plus the Throwable that triggered it
    public String fallback(String orderId, Throwable t) {
        return "Fallback: Payment service unavailable, try again later.";
    }
}
```

4. Test the Circuit Breaker:

Create a REST controller to test the service:

```java
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class PaymentController {

    private final PaymentService paymentService;

    public PaymentController(PaymentService paymentService) {
        this.paymentService = paymentService;
    }

    @GetMapping("/pay/{orderId}")
    public String pay(@PathVariable String orderId) {
        return paymentService.processPayment(orderId);
    }
}
```

5. Monitor with Prometheus:

Add Prometheus dependency and configure metrics:

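```xml
<dependency>
    <groupId>io.micrometer</groupId>
    <artifactId>micrometer-registry-prometheus</artifactId>
    <version>1.12.0</version>
</dependency>
```

Access metrics at /actuator/prometheus. This endpoint is served by Spring Boot Actuator, so spring-boot-starter-actuator must also be a dependency, and the endpoint has to be exposed (for example, by including prometheus in management.endpoints.web.exposure.include).

6. Run and Test: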

Start the Spring Boot application and send requests to http://localhost:8080/pay/testOrder. Observe Circuit Breaker state transitions in logs or Prometheus.
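The `slidingWindowSize` and `failureRateThreshold` settings from step 2 describe a rate-based trip condition: the breaker looks at the most recent calls and opens when the share of failures reaches the threshold. The sketch below illustrates that idea with a count-based ring buffer. It is a simplified model written for this guide, not Resilience4j's implementation; the class name `SlidingWindowFailureRate` is hypothetical, and it assumes the rate is only evaluated once the window is full.

```java
/** Count-based sliding window over the most recent call outcomes (sketch). */
class SlidingWindowFailureRate {
    private final boolean[] outcomes; // ring buffer, true = failed call
    private int next = 0;             // slot that the next outcome overwrites
    private int recorded = 0;         // number of outcomes currently stored
    private int failures = 0;         // failed calls currently in the window

    SlidingWindowFailureRate(int windowSize) {
        if (windowSize < 1) {
            throw new IllegalArgumentException("windowSize must be >= 1");
        }
        this.outcomes = new boolean[windowSize];
    }

    /** Records one call outcome, evicting the oldest once the window is full. */
    void record(boolean failed) {
        if (recorded == outcomes.length) {
            if (outcomes[next]) {
                failures--; // the evicted outcome was a failure
            }
        } else {
            recorded++;
        }
        outcomes[next] = failed;
        if (failed) {
            failures++;
        }
        next = (next + 1) % outcomes.length;
    }

    /** Failure rate in percent, or -1 while the window is not yet full. */
    double failureRatePercent() {
        if (recorded < outcomes.length) {
            return -1;
        }
        return 100.0 * failures / recorded;
    }

    /** True when the window is full and the rate has reached the threshold. */
    boolean shouldOpen(double thresholdPercent) {
        double rate = failureRatePercent();
        return rate >= 0 && rate >= thresholdPercent;
    }
}
```

With a window of 10 and a threshold of 50, five failures among the last ten calls are enough to trip the breaker, while a single early burst of errors is not evaluated until ten calls have been seen.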

Real-World Use Cases

Scenario 1: E-Commerce Platform

An e-commerce platform relies on multiple payment gateways. If one gateway fails, the Circuit Breaker detects the failure and the fallback switches to an alternative gateway or returns a prepared error message, so the checkout process remains functional. Netflix built Hystrix (now in maintenance mode) to apply the same isolate-and-fall-back approach to calls between its own services.

Scenario 2: Financial Services

A banking application uses microservices for account management. If the account balance service fails due to database issues, the Circuit Breaker opens, and the fallback serves cached balance data or a default response. This prevents the failure from cascading to the transaction service and helps the platform meet its uptime SLAs (e.g., 99.999%).
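The fallback side of this scenario can be sketched as a wrapper that remembers the last successful response per key and serves it when the remote call fails. The class name `CachedFallback` is hypothetical, and the sketch assumes the remote function never returns null and signals failure by throwing a runtime exception.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Serves the last known good value when the remote call fails (sketch). */
class CachedFallback<K, V> {
    private final Map<K, V> lastGood = new ConcurrentHashMap<>();
    private final Function<K, V> remote; // assumed to return non-null values

    CachedFallback(Function<K, V> remote) {
        this.remote = remote;
    }

    /** Fresh value on success; otherwise the cached value, if one exists. */
    Optional<V> get(K key) {
        V fresh;
        try {
            fresh = remote.apply(key);
        } catch (RuntimeException e) {
            // Remote call failed: fall back to whatever was seen last for this key.
            return Optional.ofNullable(lastGood.get(key));
        }
        lastGood.put(key, fresh);
        return Optional.of(fresh);
    }
}
```

In a financial context, a real implementation would likely also record when each value was cached so that the caller can label it as possibly stale; that is left out here for brevity.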

Scenario 3: Cloud-Native Applications

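In a Kubernetes-based application using Istio, Circuit Breakers are configured at the service mesh layer (through a DestinationRule's connection pool limits and outlier detection) to manage traffic to a recommendation service. If instances of the service become unresponsive, Istio ejects them from the load-balancing pool and rejects excess requests immediately, so callers fail fast and can serve a fallback instead of waiting on timeouts, which helps reduce MTTR.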

Scenario 4: IoT Systems

An IoT platform processes sensor data via microservices. If a data processing service degrades under high latency, the Circuit Breaker opens and the fallback path either buffers incoming readings in a queue or serves default analytics. This prevents system overload, and the buffered data can be processed once the service recovers, which protects data integrity.

Benefits & Limitations

Key Advantages

- Prevents Cascading Failures: Isolates faults to avoid system-wide outages.

- Optimizes Resources: Reduces futile requests to failing services.

- Improves Recovery Time: Allows services to recover without additional load.

- Enhances User Experience: Provides immediate feedback via fallbacks.

- Broadly Applicable: Works in microservices, cloud-native, and monolithic systems.

Common Challenges or Limitations

| Challenge | Description | Mitigation |
|---|---|---|
| Configuration Complexity | Setting appropriate thresholds and timeouts requires careful tuning. | Use monitoring tools to analyze failure patterns and adjust dynamically. |
| False Positives/Negatives | Overly sensitive or lenient settings can trip unnecessarily or miss failures. | Implement chaos engineering to test configurations. |
| Increased Code Complexity | Adding Circuit Breaker logic increases application complexity. | Use libraries like Resilience4j for simplified integration. |
| Partial Failures | May misinterpret partial failures as total failures in sharded systems. | Use separate, per-shard Circuit Breakers. |
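The per-shard mitigation in the last row can be sketched by keeping failure state per shard key rather than per service, so that one unhealthy shard does not block calls to the others. The class name `ShardBreakers` is hypothetical, and the sketch tracks only consecutive failures; the open timeout and Half-Open probing are omitted to keep the example short.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Tracks consecutive failures independently for each shard (sketch). */
class ShardBreakers {
    private final int failureThreshold;
    private final Map<String, AtomicInteger> consecutiveFailures = new ConcurrentHashMap<>();

    ShardBreakers(int failureThreshold) {
        this.failureThreshold = failureThreshold;
    }

    /** True when this shard alone has reached the failure threshold. */
    boolean isOpen(String shard) {
        AtomicInteger count = consecutiveFailures.get(shard);
        return count != null && count.get() >= failureThreshold;
    }

    void recordFailure(String shard) {
        consecutiveFailures.computeIfAbsent(shard, k -> new AtomicInteger()).incrementAndGet();
    }

    /** A success resets the shard's failure count. */
    void recordSuccess(String shard) {
        consecutiveFailures.computeIfAbsent(shard, k -> new AtomicInteger()).set(0);
    }
}
```

The caller checks `isOpen(shard)` before routing a request to that shard and reports the outcome afterwards, so requests for healthy shards keep flowing while the failing one is isolated.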

Best Practices & Recommendations

Security Tips

- Secure Fallback Responses: Ensure fallback data does not expose sensitive information.

- Monitor Access: Restrict Circuit Breaker configuration changes to authorized personnel.

- Encrypt Communications: Use TLS for service calls to prevent data leaks.

Performance

- Optimize Thresholds: Use historical data to set failure thresholds and timeouts.

- Minimize Latency: Place Circuit Breakers close to the service call (e.g., in the API Gateway).

- Use Asynchronous Calls: Reduce blocking with async Circuit Breaker implementations.

Maintenance

- Regular Testing: Use chaos engineering to simulate failures and validate Circuit Breaker behavior.

- Logging and Alerts: Integrate with monitoring tools (e.g., Prometheus) to track state changes.

- Dynamic Adjustments: Leverage AI/ML for adaptive threshold tuning based on traffic patterns.

Compliance Alignment

- Ensure fallback responses and Circuit Breaker logs comply with applicable regulations (e.g., GDPR for data privacy).

- Document fallback responses for auditability in regulated industries like finance.

Automation Ideas

- Automate Circuit Breaker state transitions using service mesh tools like Istio.

- Integrate with CI/CD pipelines to test Circuit Breaker behavior during deployments.

- Use automated alerts for tripped Circuit Breakers to trigger incident response workflows.

Comparison with Alternatives

| Feature/Aspect | Circuit Breaker | Retry Pattern | Timeout Pattern | Bulkhead Pattern |
|---|---|---|---|---|
| Purpose | Prevents cascading failures by isolating failing services. | Retries failed operations to handle transient failures. | Limits wait time for service responses. | Isolates components to limit failure impact. |
| Mechanism | Monitors failures and blocks requests when a threshold is exceeded. | Re-executes failed calls with backoff strategies. | Enforces a maximum wait time for responses. | Separates resources into isolated pools. |
| Use Case | Microservices with frequent inter-service calls. | Transient network issues or temporary unavailability. | Services with variable response times. | Resource-intensive operations needing isolation. |
| Pros | Prevents system-wide outages, improves recovery. | Handles temporary failures effectively. | Prevents long waits, simple to implement. | Limits failure scope, protects critical resources. |
| Cons | Adds complexity, requires careful configuration. | May overload failing services. | May fail healthy requests prematurely. | Increases resource usage. |
| Tools/Libraries | Resilience4j, Hystrix (legacy), Polly, Istio | Polly, Resilience4j | Built-in language timeouts, Resilience4j TimeLimiter | Resilience4j Bulkhead, Polly, custom resource pools |
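To make the contrast in the table concrete, here is the Retry column as a minimal sketch: the call is re-executed with an exponentially growing delay between attempts. The class name `RetryWithBackoff` and its parameters are hypothetical. Unlike a Circuit Breaker, nothing here stops new callers from hitting a service that is already down, which is the "may overload failing services" drawback noted above.

```java
import java.util.function.Supplier;

/** Retries a failing call with exponential backoff (sketch). */
class RetryWithBackoff {
    /**
     * Tries the action up to maxAttempts times, sleeping baseDelayMillis,
     * then 2x, then 4x, and so on between attempts. Rethrows the last failure.
     */
    static <T> T retry(Supplier<T> action, int maxAttempts, long baseDelayMillis) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                last = e;
                if (attempt < maxAttempts) {
                    sleep(baseDelayMillis << (attempt - 1)); // doubles on each attempt
                }
            }
        }
        throw last;
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException ie) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("interrupted during backoff", ie);
        }
    }
}
```

In practice the two patterns are often combined: a small number of retries handles transient errors, while the Circuit Breaker stops retrying altogether once failures persist.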

When to Choose Circuit Breaker

- Use when cascading failures are a risk in distributed systems.

- Prefer for microservices with high inter-service communication.

- Choose over Retry for non-transient failures or when services need recovery time.

- Opt for service mesh-based Circuit Breakers (e.g., Istio) in cloud-native environments.

Conclusion

Final Thoughts

The Circuit Breaker pattern is a cornerstone of resilient system design in SRE, enabling teams to manage failures effectively and maintain high availability. By isolating faults, optimizing resources, and enhancing user experience, it addresses the challenges of distributed systems. As systems grow more complex, integrating Circuit Breakers with modern tools like service meshes and AI-driven monitoring will become increasingly vital.

Future Trends

- AI-Driven Circuit Breakers: Use machine learning to dynamically adjust thresholds based on real-time data.

- Chaos Engineering Integration: Combine with chaos engineering for proactive failure testing.

- Serverless Architectures: Adapt Circuit Breakers for serverless environments to handle ephemeral service failures.

Next Steps

- Explore libraries like Resilience4j or service meshes like Istio for implementation.

- Experiment with chaos engineering tools (e.g., Chaos Monkey) to test Circuit Breaker configurations.

- Join SRE communities to share best practices and learn from real-world applications.

Links to Official Docs and Communities

- Resilience4j Documentation: https://resilience4j.readme.io/

- Istio Circuit Breaker Guide: https://istio.io/latest/docs/tasks/traffic-management/circuit-breaking/

- Hystrix (Legacy): https://github.com/Netflix/Hystrix

- SRE Community: https://sre.google/community/