Introduction & Overview

Metrics aggregation is a cornerstone of Site Reliability Engineering (SRE), enabling teams to monitor, analyze, and optimize the performance and reliability of complex systems. By collecting and summarizing numerical data over time, metrics aggregation provides actionable insights into system health, helping SREs detect issues, ensure uptime, and meet service-level objectives (SLOs). This tutorial offers an in-depth exploration of metrics aggregation, tailored for technical readers, with practical examples and best practices.

What is Metrics Aggregation?

Metrics aggregation involves collecting, processing, and summarizing numerical data (metrics) from systems, applications, or infrastructure to provide a high-level view of performance and behavior. Unlike detailed logs or traces, metrics are lightweight, time-series data points optimized for storage, querying, and visualization. In SRE, metrics aggregation is used to monitor key indicators like latency, error rates, and resource utilization, enabling proactive issue resolution.

History or Background

Metrics aggregation evolved alongside the growth of distributed systems and cloud computing. In the early 2000s, as systems became more complex, organizations like Google pioneered SRE practices, emphasizing the need for real-time, scalable monitoring. Tools like Nagios and Zabbix laid the groundwork, but modern solutions like Prometheus and InfluxDB introduced advanced time-series databases optimized for metrics aggregation. The rise of DevOps and microservices further solidified its importance, as teams required efficient ways to monitor dynamic, distributed environments.

Why is it Relevant in Site Reliability Engineering?

Metrics aggregation is critical in SRE for several reasons:

- Proactive Monitoring: Aggregated metrics help identify trends and anomalies before they escalate into outages.

- Error Budget Management: Metrics track error rates and uptime, informing decisions about balancing innovation and stability.

- Scalability: Aggregated data reduces storage and processing overhead, enabling monitoring of large-scale systems.

- Decision-Making: Dashboards and alerts built on aggregated metrics guide incident response and capacity planning.

Core Concepts & Terminology

Key Terms and Definitions

- Metric: A numerical measurement of system performance (e.g., CPU usage, request latency).

- Time-Series Data: Data points indexed by timestamps, used for tracking metrics over time.

- Aggregation: The process of summarizing metrics (e.g., averaging, summing, or counting) over a time window.

- Time-Series Database (TSDB): A database optimized for storing and querying time-series data (e.g., Prometheus, InfluxDB).

- Labels: Key-value pairs attached to metrics for filtering and aggregation (e.g.,

region=us-east). - Golden Signals: Key SRE metrics—latency, traffic, errors, and saturation—used to assess system health.

- Service-Level Indicators (SLIs): Measurable metrics that reflect user experience (e.g., request success rate).

- Service-Level Objectives (SLOs): Target values for SLIs, defining acceptable performance levels.

| Term | Definition | Example |

|---|---|---|

| Metric | Numeric measurement of system performance | CPU usage = 70% |

| Aggregation | Combining multiple metrics into summaries | Avg CPU usage across nodes |

| SLI (Service Level Indicator) | Measurable metric of system performance | Latency < 200ms |

| SLO (Service Level Objective) | Target for SLIs | 99.9% requests < 200ms |

| Time-Series Database (TSDB) | Stores metrics over time | Prometheus, InfluxDB |

| Labels/Tags | Key-value pairs for categorizing metrics | env=prod, region=us-east |

How It Fits into the Site Reliability Engineering Lifecycle

Metrics aggregation is integral to the SRE lifecycle:

- Design: Define SLIs and SLOs to guide system architecture.

- Development: Instrument code to emit metrics for monitoring.

- Deployment: Integrate metrics collection into CI/CD pipelines for real-time feedback.

- Monitoring: Use aggregated metrics to detect anomalies and trigger alerts.

- Incident Response: Analyze metrics to identify root causes and resolve issues.

- Postmortems: Review historical metrics to prevent recurrence of incidents.

Architecture & How It Works

Components

A metrics aggregation system typically includes:

- Metrics Source: Applications, servers, or infrastructure emitting raw metrics.

- Collector: A service that gathers metrics (e.g., Prometheus scraping endpoints).

- Time-Series Database: Stores and indexes metrics for efficient querying (e.g., InfluxDB, Prometheus).

- Querier: Processes queries for aggregated metrics, often with optimization techniques like caching.

- Visualization System: Displays metrics in dashboards (e.g., Grafana, Kibana).

- Alerting System: Triggers notifications based on predefined thresholds.

Internal Workflow

- Collection: Metrics sources (e.g., application endpoints) expose metrics in a standard format (e.g., Prometheus exposition format).

- Ingestion: Collectors pull (or receive pushed) metrics and forward them to the TSDB.

- Storage: The TSDB stores metrics with timestamps and labels, optimizing for time-based queries.

- Aggregation: The querier applies functions (e.g.,

sum,avg,rate) to summarize metrics. - Visualization/Alerting: Dashboards display trends, and alerts notify teams of anomalies.

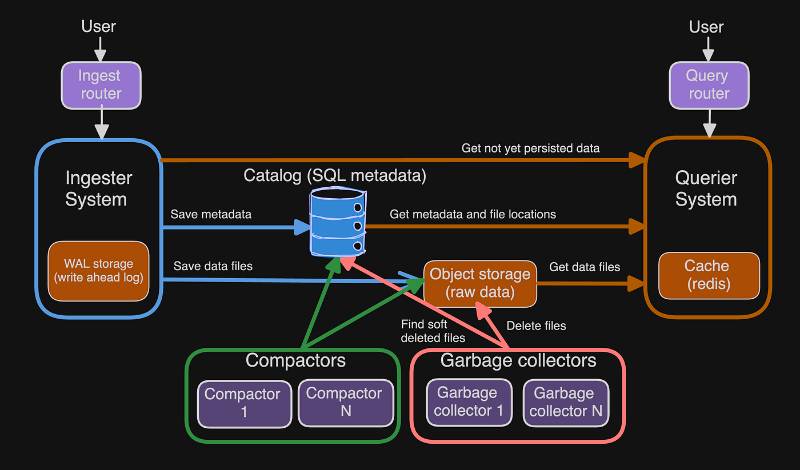

Architecture Diagram Description

The architecture diagram for a metrics aggregation system includes:

- Metrics Sources: Represented as nodes (e.g., servers, containers) emitting metrics.

- Collector: A central node (e.g., Prometheus server) pulling metrics via HTTP endpoints.

- TSDB: A database node storing time-series data, connected to the collector.

- Querier: A module within or alongside the TSDB, handling query execution.

- Visualization: A dashboard tool (e.g., Grafana) connected to the TSDB for real-time graphs.

- Alerting: An alerting manager (e.g., Prometheus Alertmanager) sending notifications via email/SMS.

- Queue (Optional): A buffer (e.g., Kafka) to handle high-throughput metrics and prevent data loss.

Diagram Flow:

[Application/Service] --> [Metrics Exporter] --> [Collector/Agent]

--> [Aggregation Layer (Prometheus/InfluxDB)]

--> [Visualization (Grafana)] + [Alerting (Alertmanager)]

Integration Points with CI/CD or Cloud Tools

- CI/CD: Metrics collectors integrate with CI/CD pipelines (e.g., Jenkins, GitLab) to monitor deployment performance and rollback if SLIs degrade.

- Cloud Tools: Cloud providers like AWS (CloudWatch), Azure (Monitor), and GCP (Stackdriver) offer native metrics aggregation. Prometheus can scrape cloud metrics via exporters.

- Container Orchestration: Kubernetes exporters expose pod and node metrics for aggregation.

Installation & Getting Started

Basic Setup or Prerequisites

- System Requirements: Linux/macOS/Windows, 4GB RAM, 10GB storage.

- Dependencies: Docker (for containerized setup), a programming environment (e.g., Python, Go) for custom metrics.

- Network: Open ports for metrics endpoints (e.g., 9090 for Prometheus).

- Tools: Prometheus, Grafana, and a metrics-emitting application.

Hands-On: Step-by-Step Beginner-Friendly Setup Guide

This guide sets up a Prometheus and Grafana stack to aggregate and visualize metrics.

- Install Docker:

sudo apt-get update

sudo apt-get install docker.io2. Deploy Prometheus:

Create a prometheus.yml configuration file:

global:

scrape_interval: 15s

scrape_configs:

- job_name: 'example-app'

static_configs:

- targets: ['host.docker.internal:8080']Run Prometheus in a Docker container:

docker run -d -p 9090:9090 -v $(pwd)/prometheus.yml:/etc/prometheus/prometheus.yml prom/prometheus

3. Set Up a Sample Application:

Use a Python app with Prometheus client library to emit metrics:

from prometheus_client import start_http_server, Counter

import time

request_count = Counter('app_requests_total', 'Total requests')

start_http_server(8080)

while True:

request_count.inc()

time.sleep(1)Run the app:

pip install prometheus_client

python app.py

4. Install Grafana:

docker run -d -p 3000:3000 grafana/grafana5. Configure Grafana:

- Access Grafana at

http://localhost:3000(default login: admin/admin). - Add Prometheus as a data source (URL:

http://localhost:9090). - Create a dashboard to visualize

app_requests_totalmetrics.

6. Verify Setup:

- Check Prometheus UI at

http://localhost:9090for scraped metrics. - View Grafana dashboard for request count trends.

Real-World Use Cases

Scenario 1: E-Commerce Platform Monitoring

- Context: An e-commerce platform tracks checkout latency to ensure a smooth user experience.

- Application: Prometheus aggregates latency metrics from microservices, and Grafana dashboards display 95th percentile latency. Alerts trigger if latency exceeds SLOs (e.g., 500ms).

- Outcome: SREs identify slow database queries, optimize them, and reduce checkout latency by 20%.

Scenario 2: Cloud-Native Application

- Context: A Kubernetes-based application monitors pod resource usage.

- Application: Prometheus scrapes Kubernetes metrics via exporters, aggregating CPU/memory usage. Alerts notify teams of saturation risks.

- Outcome: Autoscaling rules are adjusted, preventing resource exhaustion during traffic spikes.

Scenario 3: Financial Services Error Tracking

- Context: A banking application monitors transaction error rates to meet regulatory SLAs.

- Application: Metrics aggregation tracks HTTP 500 errors across APIs. An error budget is enforced to balance feature releases and reliability.

- Outcome: Error rate stays below 0.1%, ensuring compliance and user trust.

Scenario 4: Streaming Service Availability

- Context: A video streaming platform ensures 99.99% uptime.

- Application: InfluxDB aggregates availability metrics from CDN nodes, and alerts trigger on downtime. Dashboards show regional performance trends.

- Outcome: Rapid detection of CDN outages reduces downtime by 30%.

Benefits & Limitations

Key Advantages

- Efficiency: Metrics are lightweight, reducing storage and query costs compared to logs/traces.

- Real-Time Insights: Enables proactive issue detection with minimal latency.

- Scalability: Handles high-throughput data in distributed systems.

- Flexibility: Supports custom metrics and complex aggregations (e.g.,

rate(),sum()).

Common Challenges or Limitations

- Data Loss Risk: High-throughput systems may lose data if TSDB is unavailable without a queue.

- Complexity: Configuring and maintaining aggregation pipelines can be complex for large systems.

- Limited Context: Metrics lack the detailed context of logs or traces, requiring integration for root cause analysis.

- High Cardinality: Excessive labels can degrade TSDB performance.

| Aspect | Benefit | Limitation |

|---|---|---|

| Storage Efficiency | Lightweight, optimized for time-series | High cardinality impacts performance |

| Real-Time Monitoring | Fast anomaly detection | Lacks detailed context for debugging |

| Scalability | Handles large-scale systems | Complex setup for distributed systems |

| Flexibility | Supports custom metrics and queries | Risk of data loss without queues |

Best Practices & Recommendations

Security Tips

- Secure Endpoints: Restrict metrics endpoints with authentication (e.g., OAuth, API keys).

- Encrypt Data: Use TLS for metrics transmission to prevent eavesdropping.

- Least Privilege: Limit TSDB and collector access to authorized services only.

Performance

- Optimize Labels: Avoid high-cardinality labels (e.g., user IDs) to reduce TSDB load.

- Efficient Queries: Use query optimization techniques like caching and pre-aggregation.

- Queueing: Implement a buffer (e.g., Kafka) for high-throughput metrics to prevent data loss.

Maintenance

- Regular Reviews: Periodically assess metrics relevance to avoid outdated data.

- Retention Policies: Set TSDB retention periods (e.g., 30 days) to manage storage.

- Automation: Automate metrics collection and alerting setup via Infrastructure-as-Code (e.g., Terraform).

Compliance Alignment

- Regulatory Compliance: Ensure metrics capture SLIs required for regulations (e.g., GDPR, HIPAA).

- Audit Trails: Log metrics access and modifications for compliance audits.

Comparison with Alternatives

Alternatives

- Logging: Captures detailed event data but is storage-intensive and slower to query.

- Tracing: Tracks request flows across systems but is complex and resource-heavy.

- Cloud-Native Solutions: AWS CloudWatch, Azure Monitor, or GCP Stackdriver offer managed metrics aggregation but may lack customization.

Comparison Table

| Tool/Approach | Pros | Cons | Best Use Case |

|---|---|---|---|

| Metrics Aggregation | Lightweight, scalable, real-time | Limited context, complex setup | Monitoring system health, SLIs/SLOs |

| Logging | Detailed context, good for debugging | High storage cost, slow queries | Root cause analysis |

| Tracing | Tracks request flows, distributed systems | Resource-intensive, complex | Debugging microservices |

| Cloud-Native (e.g., CloudWatch) | Managed, easy setup | Less customizable, vendor lock-in | Cloud-based applications |

When to Choose Metrics Aggregation

- Choose Metrics Aggregation: For real-time monitoring, scalability, and SLO tracking in distributed systems.

- Choose Alternatives: Use logging for detailed debugging, tracing for microservices, or cloud-native solutions for fully managed environments.

Conclusion

Metrics aggregation is a vital practice in SRE, enabling teams to maintain reliable, scalable systems through real-time insights and proactive monitoring. By aggregating key metrics like latency, errors, and saturation, SREs can ensure systems meet SLOs and deliver exceptional user experiences. Future trends include AI-driven anomaly detection and tighter integration with observability platforms like OpenTelemetry.

Next Steps:

- Experiment with Prometheus and Grafana for hands-on experience.

- Explore advanced TSDB features like query federation for large-scale systems.

- Join SRE communities (e.g., CNCF, SREcon) to stay updated.

Resources:

- Prometheus Official Documentation

- Grafana Documentation

- OpenTelemetry