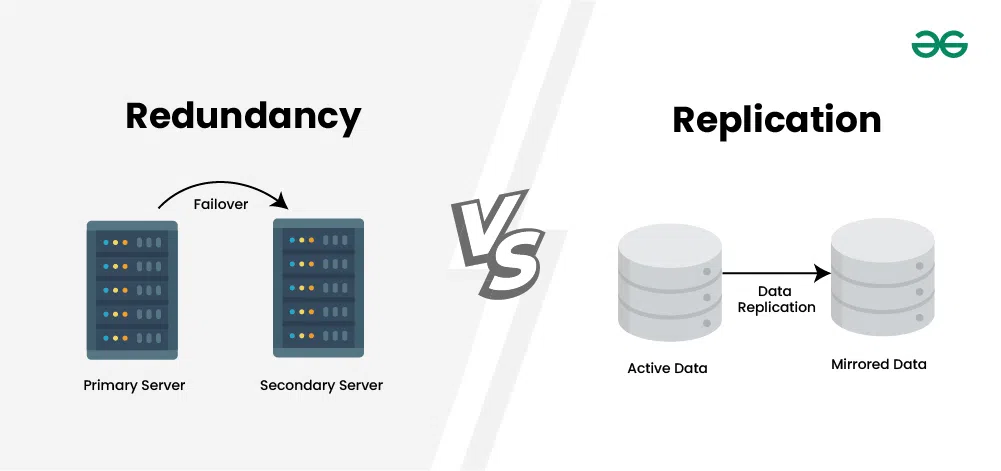

Redundancy is the deliberate duplication of critical components or paths so that a failure doesn’t violate your SLOs. Put simply: remove single points of failure (SPOFs) and make sure something else can take over fast enough that users don’t notice.

Where you add redundancy (failure domains)

- Process / pod: multiple workers for the same service.

- Host / node: more than one VM/node per service tier.

- Availability Zone (AZ): replicas spread across ≥2 AZs.

- Region: active-active or active-passive between regions.

- Vendor: multi-provider or alternate managed service (only when justified).

Common patterns

- N+1 / N+M: have at least one (or M) spare capacity unit beyond steady-state needs.

- 2N (“mirrored”): two full-capacity stacks; either can serve 100%.

- Active-active: all sites handle traffic; failover is mostly automatic and fast.

- Active-passive: a hot/warm standby takes over on failure (some failover time).

- Quorum-based replication: e.g., 3 or 5 nodes (Raft/Paxos) so a majority can proceed.

- Erasure coding / parity: data survives disk/node loss without full duplication.

How redundancy improves reliability

If one replica has availability A, two independent replicas behind a good load balancer have availability ≈ 1 – (1–A)² (and so on), assuming independent failures. Correlation kills this benefit—so separate replicas across failure domains (different AZs/regions, power, network, versions).

Design principles

- Eliminate SPOFs: control planes, queues, caches, secrets stores, DNS, load balancers, and CI/CD paths all need redundancy or fast recovery.

- Isolate failure domains: spread replicas across AZs; don’t co-locate primaries and standbys.

- Diversity beats duplication: different versions, hardware, or providers reduce correlated risk.

- Automate failover: health checks, timeouts, circuit breakers, and quick DNS/LB re-routing.

- Right-size capacity: spare headroom for failover (e.g., N+1) and pre-scale if needed.

Trade-offs & pitfalls

- Cost vs reliability: more replicas, more money. Tie decisions to SLO/error-budget math.

- Complexity: multi-region state is hard (consistency, latency, split-brain).

- Hidden coupling: two “redundant” services sharing one database = still a SPOF.

- False redundancy: two pods on one node or one AZ adds little resilience.

What to monitor to prove redundancy works

- Per-AZ/region health and synthetic checks (not just aggregate).

- Failover time (MTTR) and success rate of automated promotions.

- Quorum / ISR health (for Kafka/etcd/Consul), replication lag, and RPO/RTO.

- Capacity headroom after a node/AZ loss (can you still meet SLO?).

Test it (don’t just hope)

- Game days / chaos experiments: kill a node, drain an AZ, sever a NAT gateway, block a dependency; verify traffic stays healthy and alerts are actionable.

- Runbooks & drills: promote replicas, restore from backups, and rehearse DNS/LB failover.

Concrete examples (EKS/AWS flavored)

- Stateless services:

replicas: 3+, PodDisruptionBudget, Pod Topology Spread across 3 AZs, HPA with spare headroom; ALB/NLB across subnets in all AZs. - Stateful stores:

- RDS/Aurora Multi-AZ, cross-region replica for DR; test failovers.

- Kafka (or MSK/Confluent): replication factor ≥3,

min.insync.replicas=2, rack-aware across AZs. - Redis/ElastiCache: cluster mode enabled with multi-AZ, automatic failover.

- Storage & DNS: S3 with versioning + (if needed) cross-region replication; Route 53 health-check + failover/latency records.

- Control plane dependencies: multiple NAT gateways (per AZ), duplicate VPC endpoints for critical services, redundant CI runners, dual logging/metrics paths when feasible.

Quick checklist

- Do we meet capacity with one node/AZ down?

- Are replicas spread across AZs and enforced by policy?

- Is failover automatic, observed, and rehearsed?

- Are dependencies (DB, cache, queue, DNS, secrets) redundant too?

- Do monitors alert on loss of redundancy (e.g., quorum at risk), not just total outage?

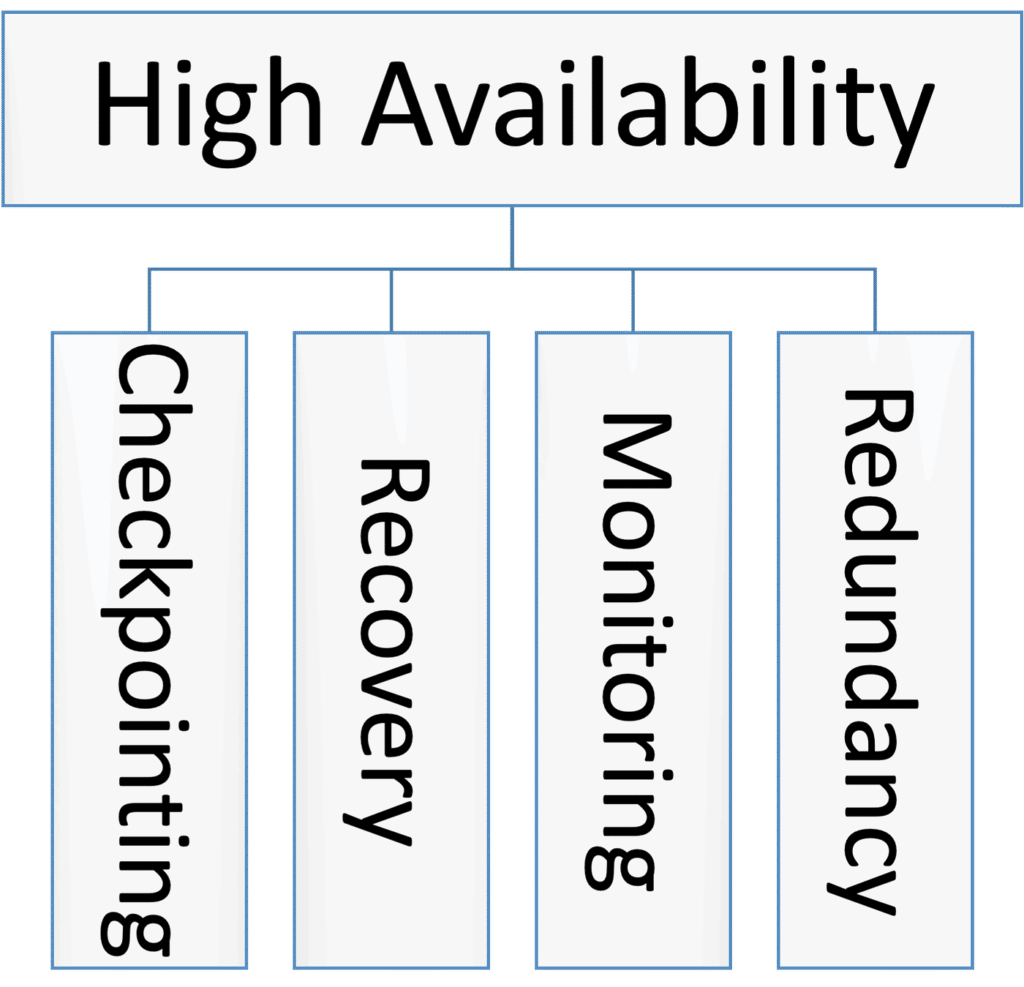

4 Pillors of High Availability